

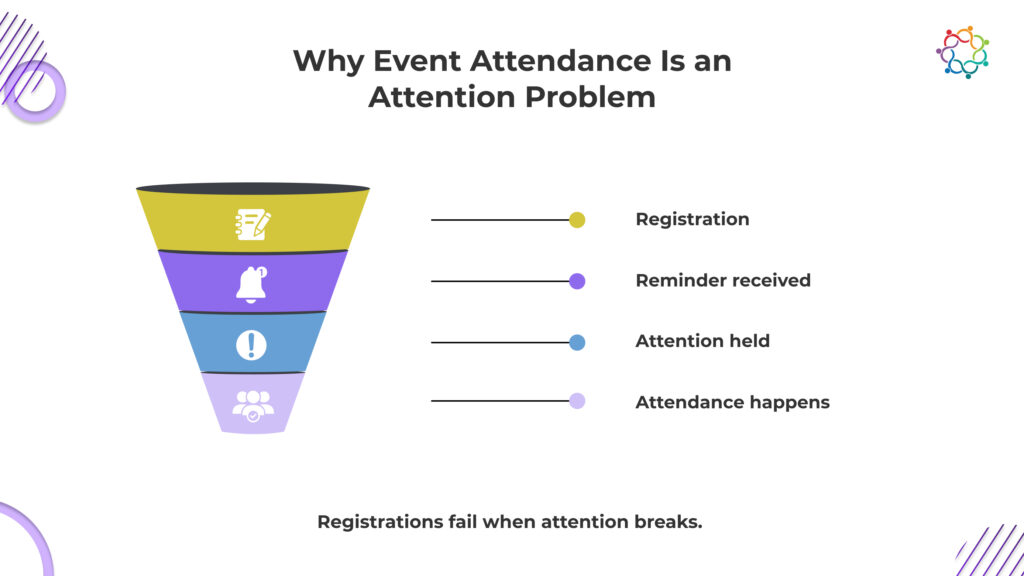

Event marketing rarely fails because of weak programming or poor promotion. It fails because attention is scarce. B2B teams celebrate registrations, then watch attendance fall sharply as the event approaches. Reminders are sent, posts are published, and calendars are blocked, but follow-through remains unreliable.

The issue is not awareness. It is timing and proximity. Inbox fatigue means event emails are buried under internal threads and automation noise. Social platforms add further unpredictability, because algorithms determine whether an event message is ever seen. By the time an attendee notices an update, the decision moment has often passed.

In 2026, event attendance is increasingly shaped by channels that operate closer to real behavior. People show up when communication feels direct, relevant, and human. This shift is why WhatsApp event marketing is gaining strategic importance. It aligns with how professionals coordinate important commitments, turning intent into action rather than letting attention slip away.

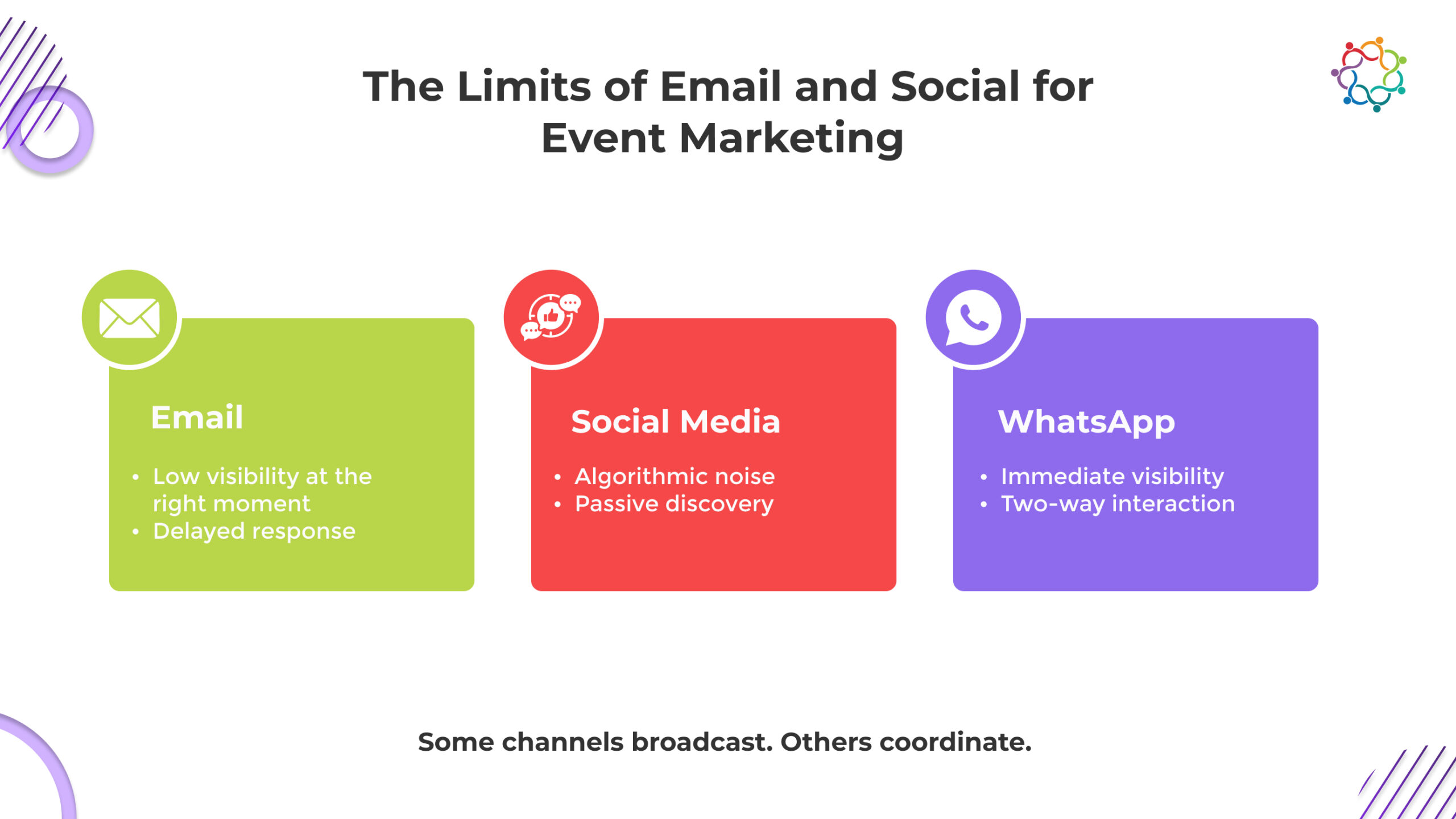

Email and social media remain foundational channels, but their structural limitations are increasingly visible in event contexts. Email is asynchronous by design. Messages arrive alongside dozens of others, are opened hours later, or never at all. Even when opened, they rarely invite immediate action. Social platforms amplify reach but dilute intent. Posts are public, fleeting, and rarely tied to a specific moment of decision.

Several structural constraints explain why these channels underperform for events:

When attendance drops, teams often respond by increasing frequency. This approach treats the problem as executional when it is behavioral. People skip events because the event never crossed their attention at the right moment or felt personally relevant.

These channels are simply not designed for high-touch, time-sensitive coordination. In long-cycle B2B environments, this gap becomes expensive. Event marketing on WhatsApp begins to surface as a response to this structural mismatch rather than a tactical experiment.

WhatsApp operates in a space that people already associate with urgency, trust, and genuine conversation, which sets it apart from conventional event marketing channels. It is not built for passive consumption or broadcasting. It is built for response. This difference in behavior explains why WhatsApp performs so differently for event marketing in 2026.

WhatsApp communication begins with explicit consent. When someone opts in, they mentally categorize messages as relevant and personal rather than promotional. This opt-in intimacy changes how messages are received. Event updates feel like coordination, not marketing. This trust-based communication increases read rates and reduces resistance, especially in high-touch B2B environments where relevance matters more than volume.

WhatsApp messages are typically read within minutes, not hours or days. This response velocity matters most close to the event date, when decisions to attend are made. Quick replies allow teams to confirm attendance, resolve doubts, and adjust communication in real time. Email and social media lack this urgency, making them unreliable at decision moments.

WhatsApp keeps all event-related communication in a single, continuous thread. Context is never lost. Logistics, value reminders, and questions build on each other instead of resetting with every message. This conversation continuity reduces confusion and effort for attendees, making follow-through easier and more natural.

Because WhatsApp lives in a high-attention space, messages are rarely ignored. People either respond, ask questions, or act. This makes WhatsApp an attention channel rather than a promotion tool. For event teams, this behavioral pattern is what makes WhatsApp event marketing structurally different from email and social, and increasingly more effective.

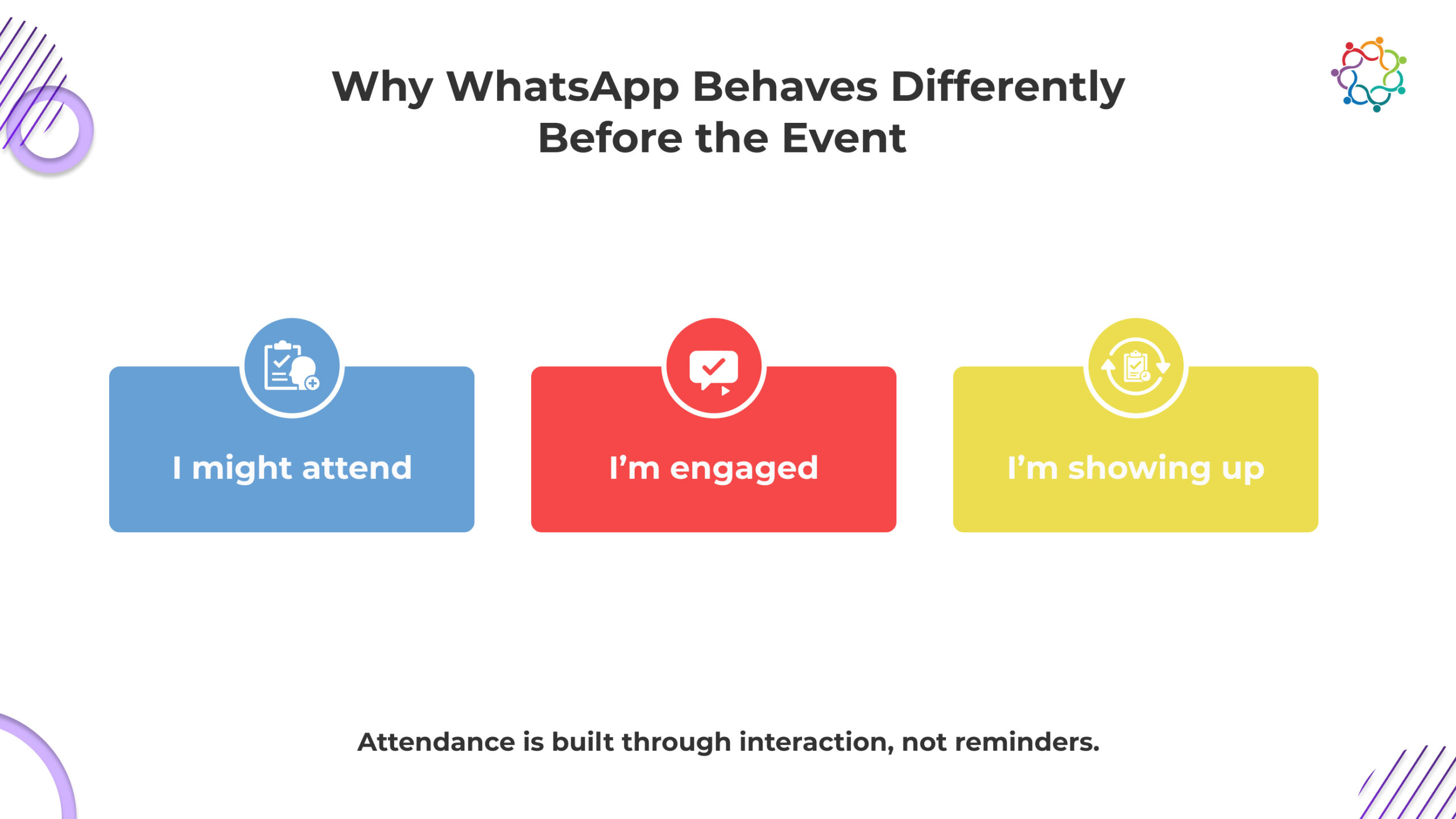

Before an event, the most important objective is not promotion but commitment. Registration is a signal of interest, not a guarantee of attendance. WhatsApp allows teams to bridge this gap by shifting communication from reminders to alignment.

Instead of sending passive messages, teams can use two-way confirmation to clarify expectations, answer questions, and reinforce value. This interaction reduces uncertainty, which is a major cause of drop-offs. When attendees can quickly confirm logistics or relevance, their likelihood of showing up increases.

WhatsApp also supports contextual messaging. Rather than generic countdown emails, messages can reference specific sessions, speakers, or outcomes relevant to the attendee.

Key ways WhatsApp supports pre-event follow-through include:

This approach reframes attendance as behavioral alignment rather than marketing pressure. By the time the event begins, attendees who remain engaged are mentally committed, not just registered. This is where WhatsApp event marketing begins to outperform traditional event communication strategies.


Once an event begins, attention becomes even more fragile. Attendees are moving between sessions, conversations, and competing priorities. At this stage, communication must be relevant and smooth. WhatsApp changes live event engagement because it functions as a real-time coordination layer rather than a broadcast channel. This helps teams support attendees without pulling them away from the experience.

Teams can use WhatsApp to deliver accurate and timely updates, including session reminders, room changes, or schedule modifications. These light-touch reminders cut down on confusion and missed opportunities without overwhelming participants. Because messages arrive in a trusted setting, they are read and answered promptly, which increases engagement without adding noise.

Questions and concerns often go unanswered during events because participants are reluctant to speak up in public or cannot find the right contact. By enabling private or small-group communication, WhatsApp makes it simpler to share comments, flag problems, and ask questions. This real-time conversation lets teams resolve issues before they affect the experience and deepens engagement.

WhatsApp lets teams gather feedback during the event rather than after it has ended. Attendees can share real-time reactions to sessions, presenters, or logistics. Instead of assessing problems after the event, teams can use this information to make changes quickly, increasing engagement and satisfaction.

WhatsApp works best when it supports the event instead of competing with it. By focusing only on essential communication, it keeps attendees informed without distracting them from sessions or conversations. This balance is what makes WhatsApp effective as a live engagement layer rather than a content feed.

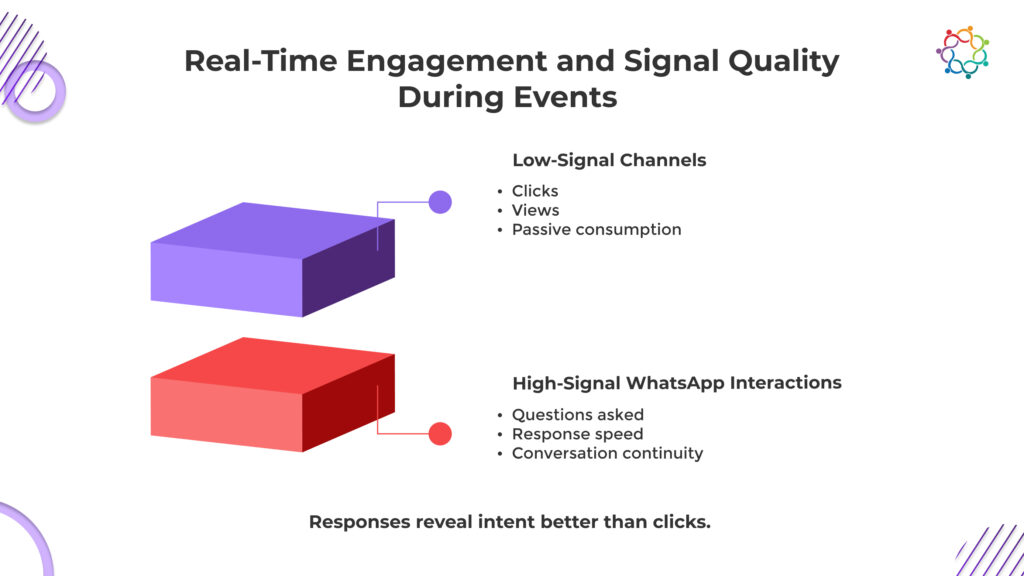

Event marketers often struggle with weak engagement data. Clicks and opens provide limited insight into intent. WhatsApp interactions, however, generate richer behavioral signals that are easier to interpret directionally.

Who responds, how quickly they reply, and what they ask reveal more than passive metrics. Response timing acts as a proxy for urgency or interest. Questions indicate relevance. Silence signals disengagement. These patterns emerge naturally within conversations.

WhatsApp also highlights differences between group and one-to-one engagement. Group interactions reveal collective interests, while private messages surface individual concerns or buying signals. This distinction helps teams prioritize follow-up without relying on complex attribution models.

Important signal types include:

These insights matter because they connect engagement to behavior, not vanity metrics. While WhatsApp does not solve attribution, it provides clarity that email and social media cannot. This signal richness is a core reason why WhatsApp event marketing is gaining strategic relevance in 2026.

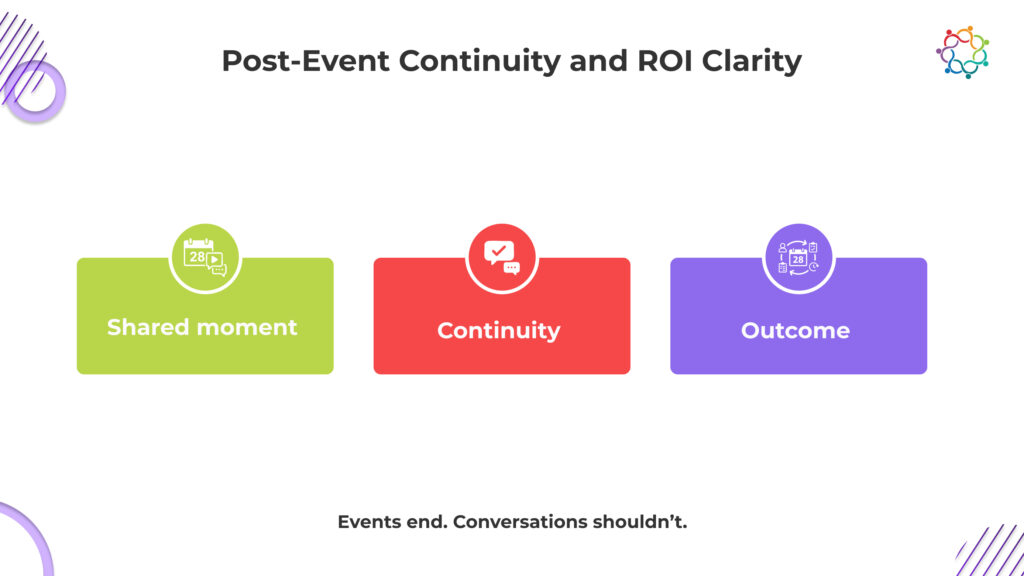

Most event communication collapses after the event ends. Attendees receive generic recap emails that summarize sessions but fail to continue the conversation. Social posts highlight photos rather than outcomes. Momentum fades quickly.

WhatsApp supports post-event continuity because the conversation never resets. Follow-ups feel natural rather than intrusive. Teams can reinforce key moments, share relevant resources, and transition into sales or relationship conversations without switching channels.

This continuity reduces friction. Attendees do not need to reorient themselves or search for context. The thread already contains the event journey. This makes follow-up messaging more relevant and timely.

Effective post-event WhatsApp communication focuses on:

By acting as a bridge rather than a blast tool, WhatsApp extends the event’s value beyond the live moment. This sustained engagement is difficult to achieve through traditional event follow-up messaging channels.

Event ROI has always been challenging to prove because outcomes are indirect and delayed. WhatsApp does not magically solve attribution, but it improves clarity by reducing drop-offs between stages.

When engagement happens in a conversational environment, it is easier to observe how attention turns into action. Fewer steps are lost between registration, attendance, and follow-up. This simplifies analysis even if it remains imperfect.

WhatsApp also lowers the cost per meaningful interaction. Instead of spending on repeated broadcast messages, teams invest in fewer, more relevant touchpoints. This efficiency matters in high-touch, long-cycle markets where quality outweighs volume.

ROI becomes clearer through:

This practical clarity strengthens the case for WhatsApp as a primary event engagement channel rather than a supporting tactic.

WhatsApp is not universally effective. Its strength depends on context, audience expectations, and discipline. A realistic assessment builds credibility with senior stakeholders.

WhatsApp excels in events that are high-touch, time-sensitive, and relationship-driven. It underperforms in large, anonymous events where opt-in intimacy is unrealistic. Overuse or aggressive messaging quickly erodes trust.

Key considerations include:

Opt-in discipline is critical. WhatsApp should never feel compulsory or excessive. When used with restraint, it strengthens trust-based communication. When abused, it damages it.

Understanding these boundaries ensures WhatsApp remains effective rather than intrusive.

In 2026, strong event marketing is not about expanding the channel mix. It is about using fewer channels with clearer intent. Audience attention has consolidated around spaces built for response, not broadcast. Channel strategy must reflect how people actually act, not how teams are used to communicating.

Channel choices should be driven by behavior, not habit. Messaging channels outperform broadcast channels when the goal is coordination, commitment, and follow-through.

Communication should align with decision moments. High-attention channels should be reserved for points where attendees choose to register, engage, or show up. Overusing them dilutes impact.

WhatsApp works best with boundaries. Email remains the system of record. Social channels maintain visibility. WhatsApp should be used selectively to drive confirmation, urgency, and real-time engagement, not as a replacement for everything else.

Reducing channels increases trust. When each channel has a defined role, messages feel intentional, are acted on faster, and create less friction for attendees.

Event success follows attention, not exposure. In 2026, attention lives in conversations that feel relevant. Messaging platforms have become central to how people coordinate important activities.

WhatsApp event marketing wins because it aligns with real behavior. It supports attendance follow-through, real-time engagement, and post-event continuity without relying on hype or volume. When used with consent and restraint, it strengthens trust and clarity.

The strategic takeaway is simple. Choose channels where people respond. Design events around attention, not assumptions. Conversations determine outcomes.

(If you’re thinking about how these ideas translate into real-world events, you can explore how teams use Samaaro to plan and run data-driven events.)

Sales teams have never lacked leads. What they lack is confidence in the conversations those leads represent. Over the last decade, large-scale events have optimized for reach, visibility, and volume, but sales outcomes have not kept pace. Attendance numbers look impressive, dashboards stay green, yet sellers still struggle to understand who is genuinely interested and why. This disconnect has pushed revenue leaders to look more closely at smaller, focused formats that behave differently.

Micro-events change the equation because they feel less like marketing broadcasts and more like real sales conversations. When attendance is intentional, and discussion is central, intent becomes visible. Sales hears the questions buyers ask, the objections they raise, and the urgency behind their interest. That is why Micro-events for B2B sales are gaining credibility inside revenue organizations, even when they are still underestimated on paper.

The tension this creates is structural. Marketing may still frame micro-events as lighter experiments or relationship plays, while sales experiences them as productive working sessions. This blog exists to resolve that tension. It reframes micro-events not as an alternative event format, but as a repeatable sales channel that produces higher-quality interactions and clearer follow-up signals than many scaled tactics.

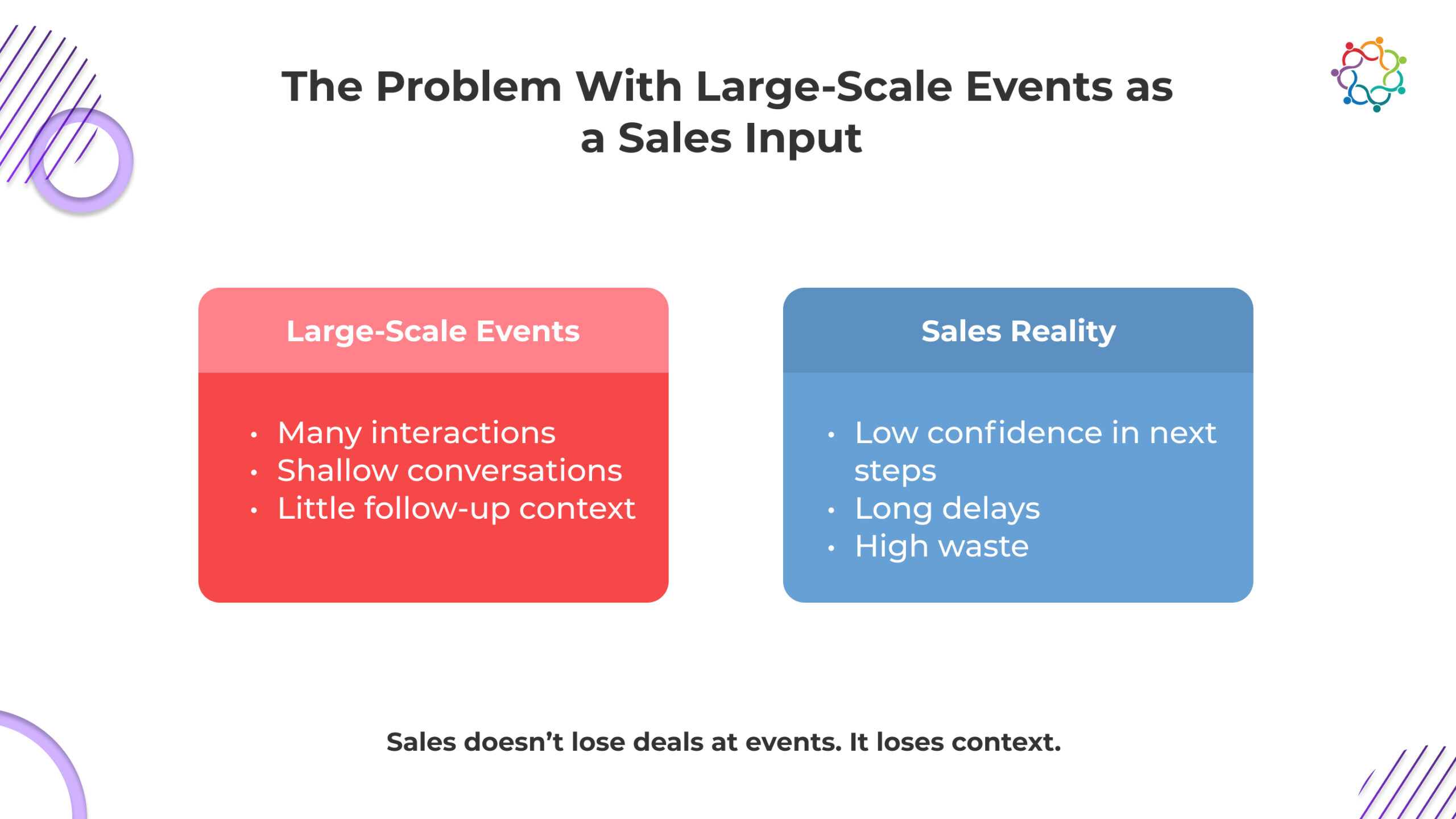

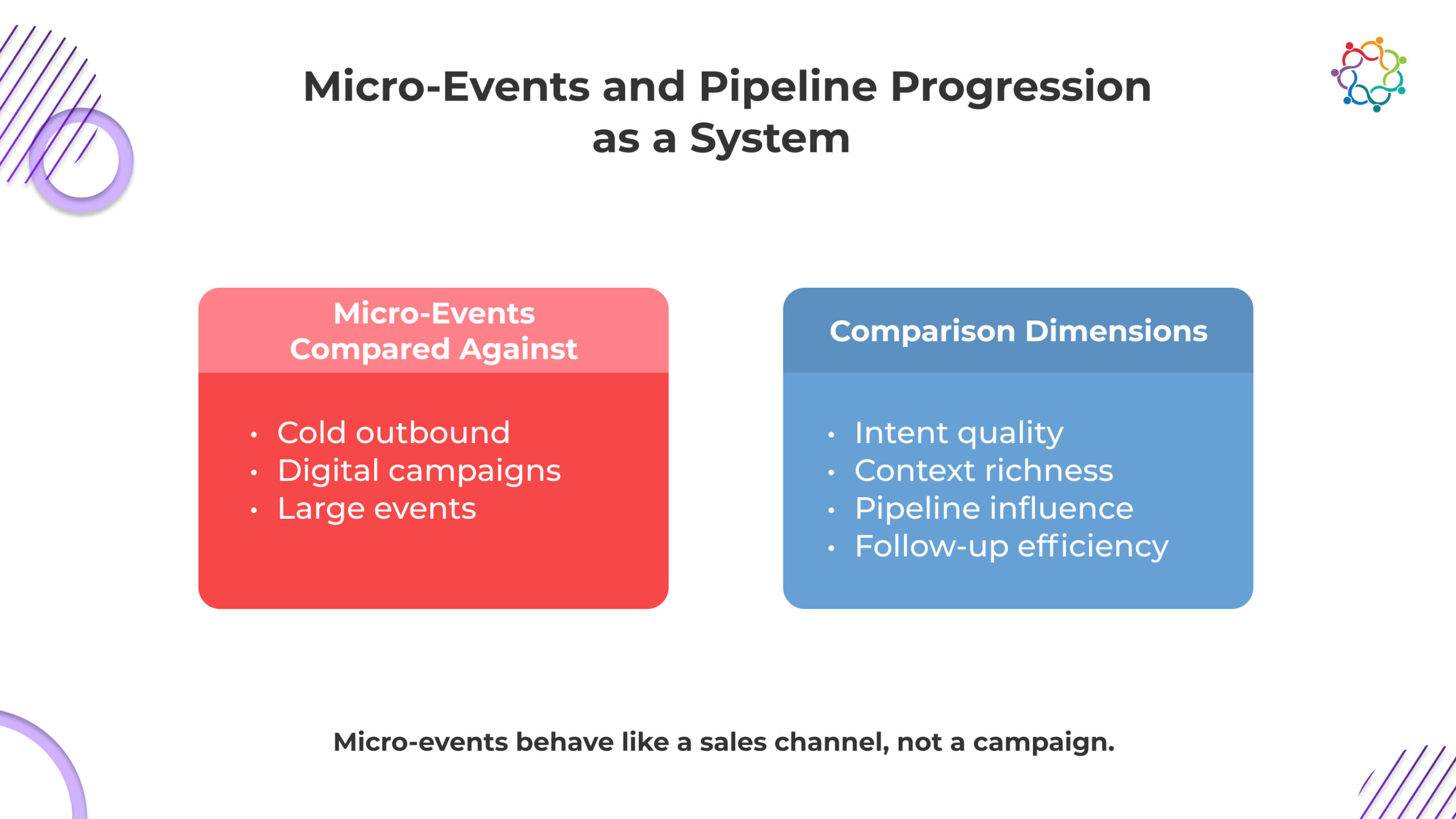

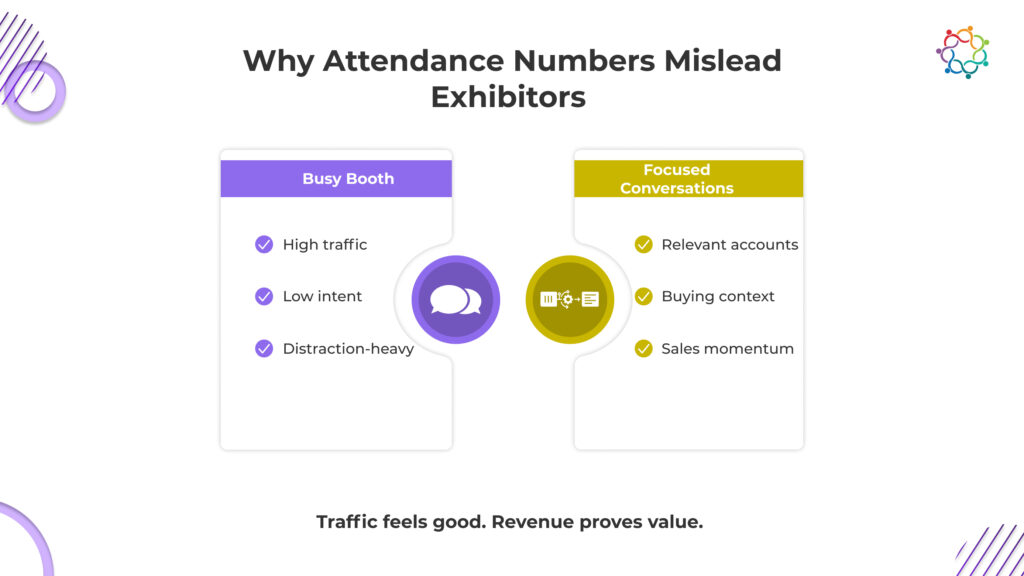

Large-scale events consistently underperform as a sales input, not because teams execute them poorly, but because their structure works against how sales evaluates value. From a revenue perspective, these events introduce friction that is difficult to overcome after the fact. This is why Micro-events for B2B sales are increasingly viewed as a corrective, not a replacement.

Big events attract a wide mix of attendees, many of whom are not in an active buying stage. Sales conversations happen, but they are often exploratory or casual. This makes it difficult for sales to separate genuine buying intent from general curiosity.

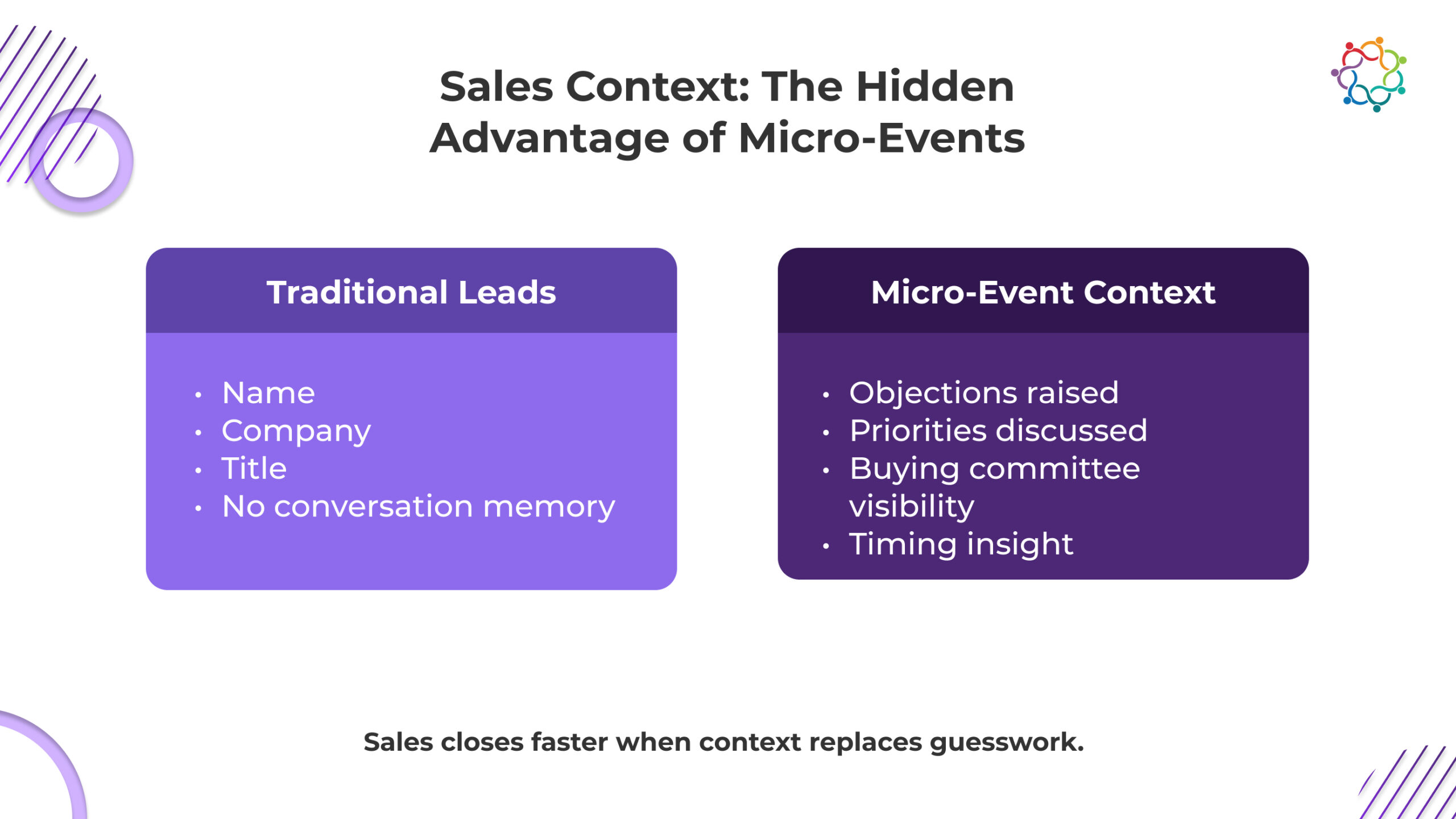

Interactions are short, interrupted, and surface-level. By the time sales follows up, critical details about priorities, objections, and timing are missing. Without context, follow-up becomes generic and ineffective.

There is usually a long gap between the event interaction and sales engagement. This delay weakens relevance and reduces the chance of converting interest into opportunity.

High lead volume masks low conversion. Sales learns to distrust these leads because the effort required to qualify them outweighs the return.

These issues are structural, not tactical, which is why sales teams consistently discount large-event outputs.

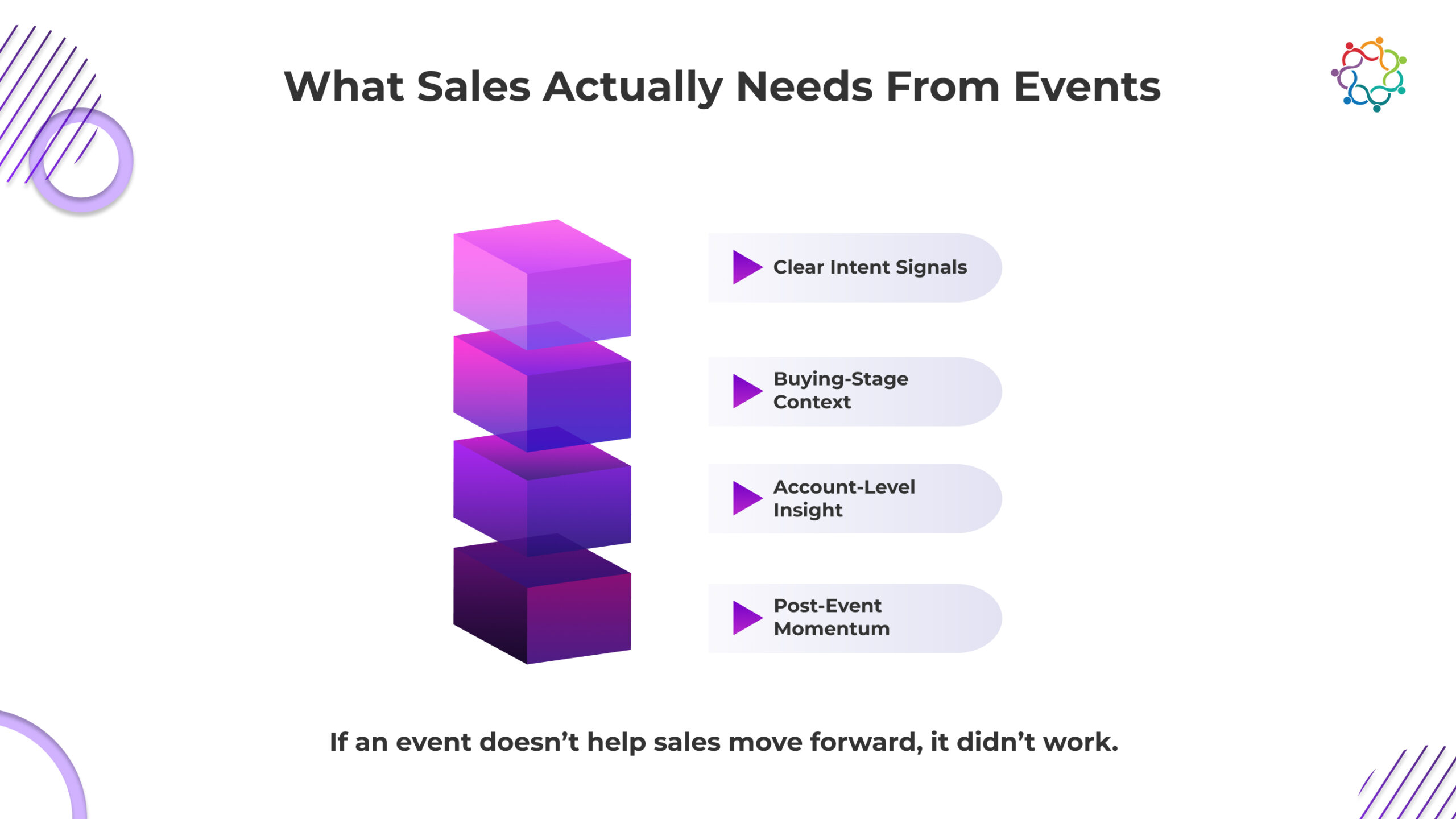

Sales needs clarity. When sellers talk about valuable event outcomes, they rarely mention attendance numbers or engagement scores. They talk about understanding where an account stands, who is involved in the decision, and what problem is actively being solved. Events that deliver this context earn sales buy-in quickly.

At its core, sales evaluates events based on whether they move conversations forward. That movement can show up as urgency, openness, or a clear next step. Without these signals, even a well-attended event becomes a dead end. This is why redefining event success through a sales lens is essential.

Sales-ready events provide insight into buying stages. They focus on how concentrated genuine buying intent is within the audience. They also allow sellers to observe interactions between stakeholders for rare visibility into buying committee dynamics.

Sales criteria for valuable events

Micro-events meet these criteria by design. They create environments where conversation quality replaces attendance volume as the primary indicator of success.

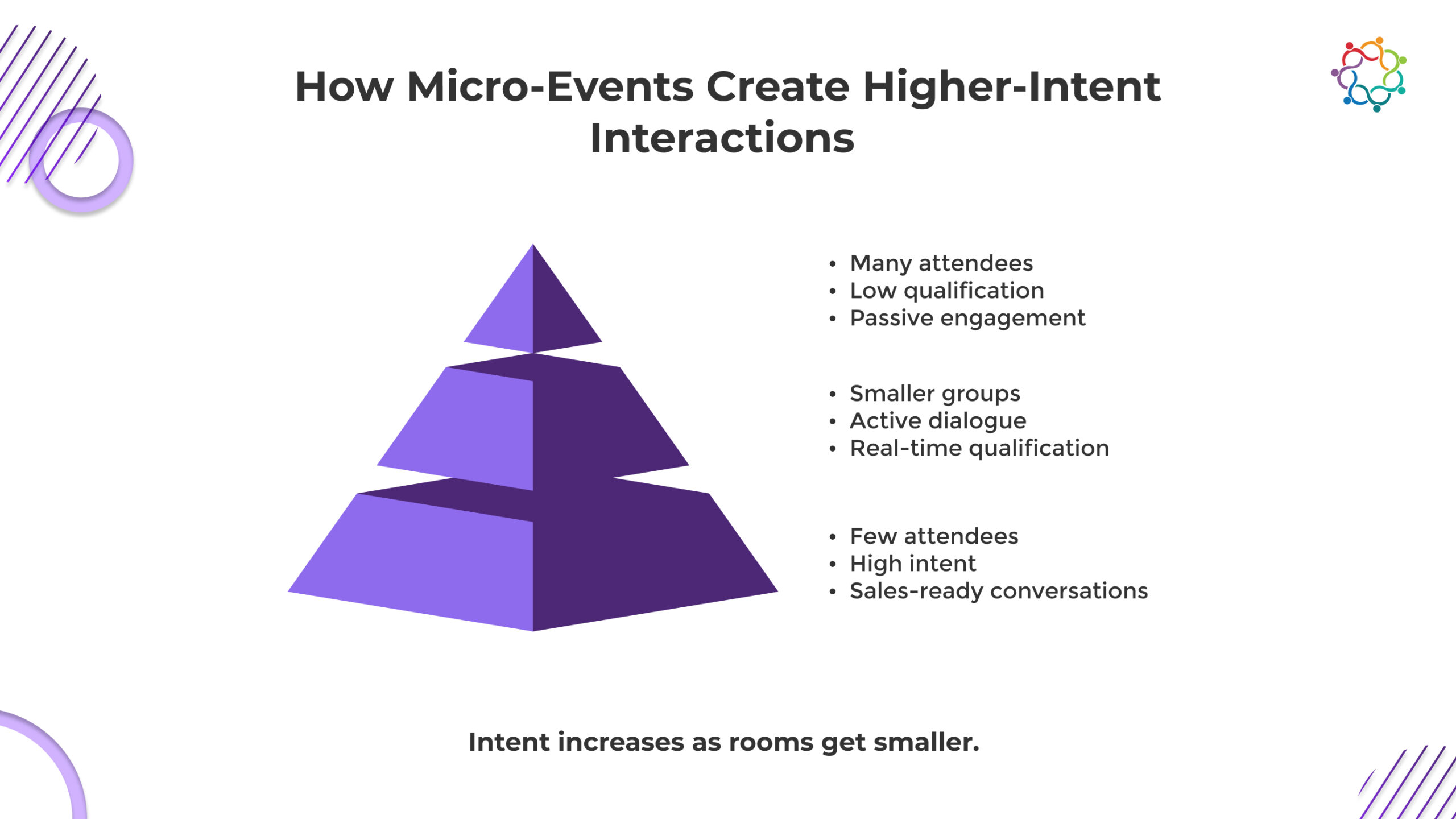

Micro-events change the quality of sales conversations because they remove the conditions that hide intent. Smaller audiences, focused topics, and active discussion create an environment where buyers speak openly about real problems. Instead of relying on post-event scoring or assumptions, sales teams can observe intent directly. This is a key reason Micro-events for B2B sales consistently outperform larger formats when conversation quality matters more than reach.

When attendance is limited, conversations become more direct and less performative. Buyers are more willing to share challenges, ask practical questions, and react honestly to ideas. Sales can quickly understand whether interest is casual or tied to an active business need.

Micro-events are built around a specific theme, role, or account set. This focus naturally discourages passive attendees. Those who join usually have a reason, which raises the overall intent level without additional qualification effort.

In micro-events, intent is expressed through questions, objections, and follow-up comments. Sales can assess readiness by listening, rather than inferring interest from clicks or downloads. This makes qualification immediate and more accurate.

Because all participants hear the same discussion, the follow-up feels connected to the experience. Sales can reference what was said, align on next steps, and continue the conversation without resetting context.

Together, these factors make intent visible, actionable, and easier for sales to trust.


The obsession with lead volume persists because it is easy to measure. Sales outcomes are harder to predict and take longer to surface. Micro-events challenge this mindset by producing fewer leads that convert at significantly higher rates. For sales teams, this trade-off is not a compromise. It is an upgrade.

Quantity-driven models also hide inefficiency. Large volumes create the illusion of pipeline contribution while masking low conversion and long sales cycles. Micro-events expose performance more honestly by tying outcomes to real interactions.

Why do fewer leads outperform larger lists?

This is why Micro-events for B2B sales align so well with revenue goals. They optimize for effectiveness, not appearance.

Leads are only part of the value micro-events deliver. Their real advantage lies in the depth of context they generate. Sales gains insight into not just who attended, but what mattered to them. This includes objections raised, priorities discussed, and timing signals that are rarely captured elsewhere.

Context also extends beyond individuals. Micro-events often reveal buying committee dynamics in subtle ways. Another overlooked benefit is memory. Sellers remember micro-events because they are conversational and participatory. This recall strengthens relationships and makes subsequent interactions feel continuous rather than transactional.

Context advantages sales gains

This level of context is difficult to manufacture after the fact. Micro-events create it naturally.

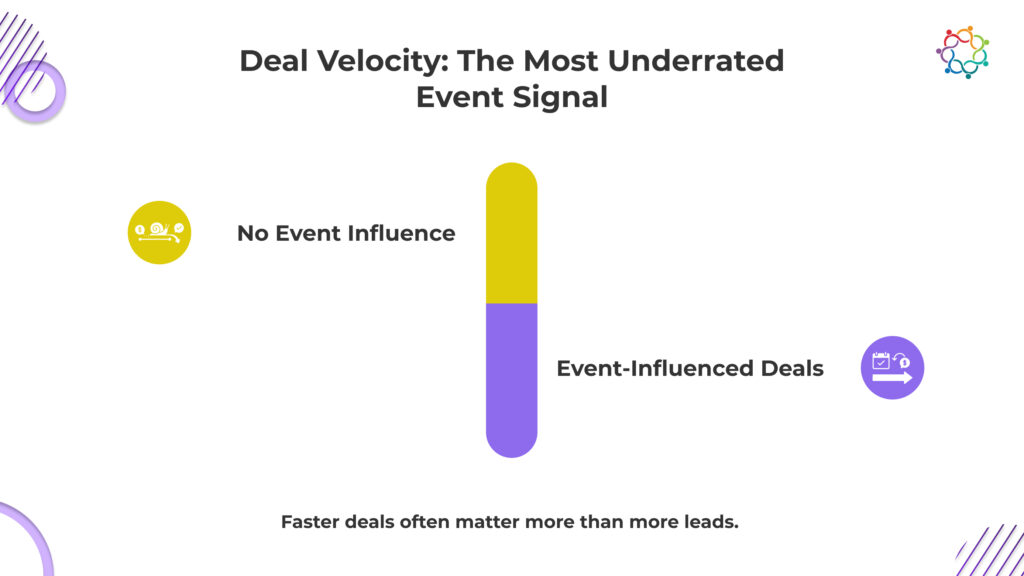

Micro-events influence the pipeline not by generating immediate deals, but by moving opportunities forward. Their impact often shows up as acceleration rather than creation. Deals progress faster because trust and understanding already exist. In some cases, dormant accounts re-engage after a focused discussion surfaces a new angle or priority.

Late-stage conversations also benefit. Micro-events can provide validation, peer perspective, or executive alignment that helps buyers gain confidence.

The key is credibility. Revenue leaders respect honesty about where and how micro-events contribute. They appreciate restraint more than inflated claims.

Where micro-events influence the pipeline

Used thoughtfully, Micro-events for B2B sales become a reliable lever for revenue movement without distorting expectations.

Micro-events only deliver sustained revenue impact when they move beyond isolated wins. A single successful session can build confidence, but sales teams need predictability, not anecdotes. Treating micro-events as a repeatable sales channel means applying the same strategic thinking used for outbound, ABM, or partner motions. When designed for consistency, Micro-events for B2B sales stop being experimental and start behaving like a dependable source of pipeline momentum.

Repeatability comes from running micro-events with a clear purpose and rhythm. When sales knows what type of conversation to expect and what outcome an event is meant to support, trust builds over time. One-off events create curiosity, but consistent formats create reliance.

Not all micro-events serve the same role. Some support early-stage exploration, while others reinforce late-stage confidence. Sequencing them intentionally allows sales to use events at moments where conversation depth matters most, rather than forcing them into every stage.

Micro-events work best when the right sellers are involved from the start. Audience selection, topic focus, and participation should reflect sales coverage models, ensuring follow-up feels natural and informed.

Like outbound, micro-events require focus and discipline. Their advantage lies in shared context and live dialogue, which few other channels can replicate at the same depth.

Alignment determines whether micro-events succeed or stall. When marketing owns them in isolation, they drift toward branding. When sales disengages, follow-up weakens. Shared ownership solves both problems.

Alignment starts with definitions. Sales and marketing must agree on what success looks like, even if measurement remains directional. Pre-event involvement is equally important. Sales input on audience, topics, and goals ensures relevance. Post-event discipline then turns conversations into momentum.

The goal is not process overload. It is clarity. When both teams understand why an event exists and how it supports revenue, execution becomes simpler.

Alignment principles that should be followed:

Micro-events thrive when they sit at the intersection of sales and marketing, not on one side.

Evaluation should be strategic, not tactical. Leaders should ask whether a micro-event fits the problem they are trying to solve. Not every audience or stage benefits from small formats. Recognizing when not to use them is a sign of maturity.

Questions worth asking include whether the event produced new insight, whether sales conversations progressed, and whether follow-up felt natural. These qualitative signals often matter more than raw numbers.

Restraint improves results. Overusing micro-events or forcing them into every scenario dilutes their effectiveness. Treated selectively, they remain high-impact.

Strategic evaluation questions

Answering honestly keeps micro-events effective and credible.

Micro-events challenge long-held assumptions about scale and impact. They prove that smaller audiences can produce stronger signals, deeper trust, and faster progress. They also expose the limits of volume-based thinking that prioritizes appearance over outcomes.

This is why Micro-events for B2B sales resonate with revenue teams. They are not small marketing. They are focused sales environments where intent is visible, and context is shared. High attendance may impress, but high intent converts.

When events feel like real conversations, sales listens. And when sales listens, deals move.


In 2026, B2B events rarely begin with an event website or a registration form. They begin with a scroll. Buyers learn about events through discussions they witness, people they trust, and ideas that speak to issues they already care about. Long before a decision to register is made, LinkedIn has quietly become the real front door to B2B events. This shift has forced teams to rethink LinkedIn event marketing strategy as a core growth lever rather than a promotional add-on.

Event websites still matter, but they no longer create first impressions. Email still drives reminders, but it rarely builds conviction. Buyers now arrive at event pages already influenced by what they have seen on LinkedIn: who is speaking, who is engaging, and how the event shows up in public discourse. This is especially true for complex buying committees where trust must be earned collectively, not sold individually.

For field and event marketing leaders, this means ownership extends beyond promotion. LinkedIn shapes who trusts the event, who shows up informed, and who is open to conversation afterward. The platform affects downstream pipeline impact, engagement depth, and attendance quality. Treating LinkedIn as a lifecycle channel reflects how contemporary B2B buyers actually behave and lays the groundwork for events that are more credible and more closely aligned with revenue.

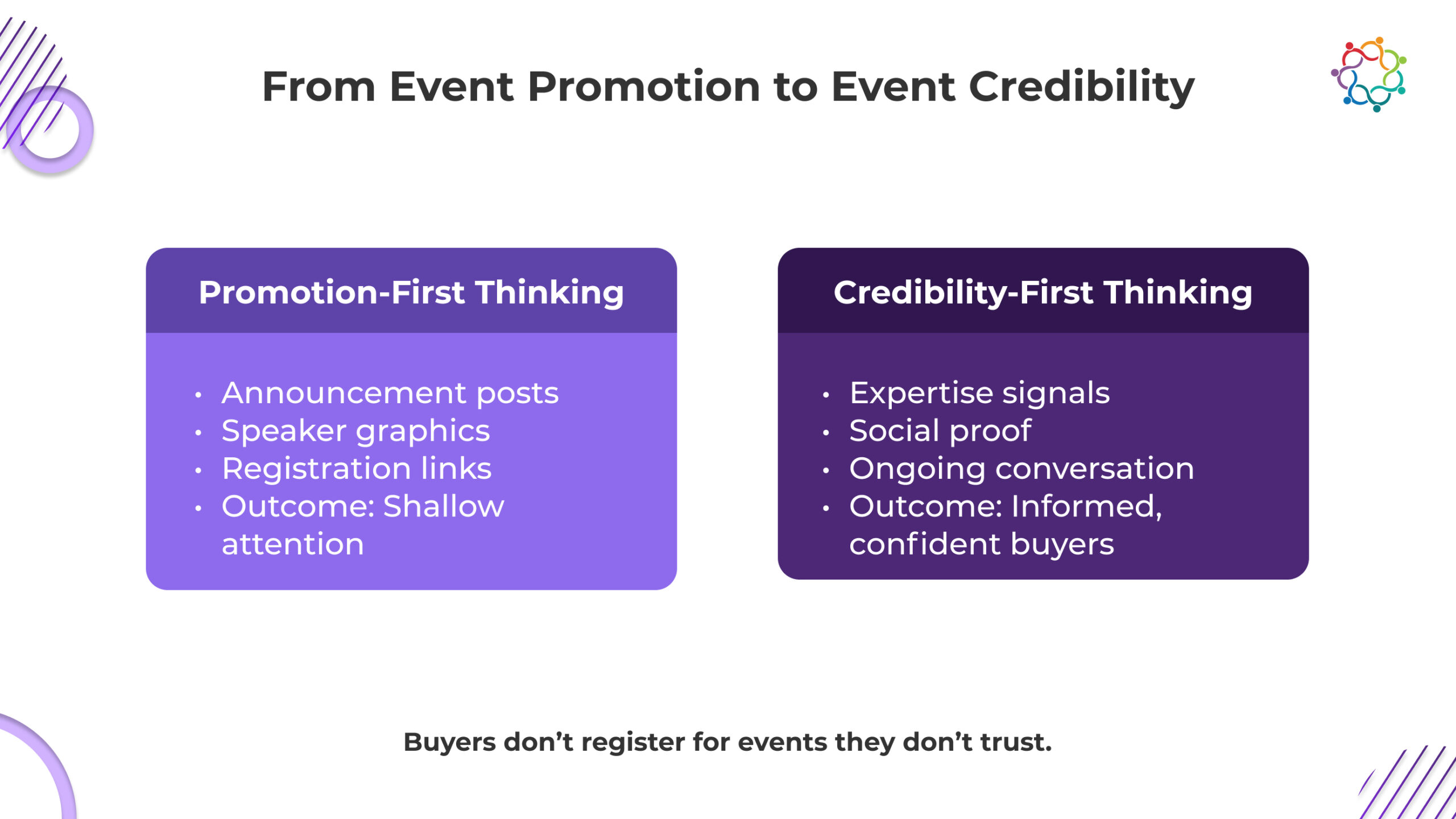

In 2026, B2B buyers do not decide to attend events based on how often they see a registration link. They decide based on whether the event feels relevant and worth their time. This shift requires teams to rethink how they use LinkedIn and reposition LinkedIn event marketing strategy around trust rather than promotion. The change can be understood through four core ideas.

Why promotion-first thinking no longer works

Announcement posts and speaker graphics create awareness but rarely conviction. Buyers are overwhelmed with event invitations and have learned to ignore anything that feels purely promotional. Without visible substance, promotion blends into background noise.

Credibility is the real driver of registration decisions

Buyers register when they believe an event will deliver insight. Credibility reduces perceived risk and increases confidence. On LinkedIn, credibility is built through thoughtful perspectives, consistent expertise, and visible seriousness around the topic.

How LinkedIn enables trust before buyers take action

LinkedIn allows buyers to observe quietly. They evaluate who is involved, how they communicate, and how others respond. Comments, discussions, and peer engagement act as social proof long before registration happens.

What changes when credibility leads the strategy

Registrations may decline when trust is prioritized, but attendance quality increases. Conversations get deeper, attendance rates rise, and follow-up after an event becomes more organic.

A strong pre-event LinkedIn presence is not about awareness. It is about setting expectations early and shaping who chooses to show up. In 2026, effective event teams use LinkedIn as a qualification layer long before registration opens.

Pre-event content should make it immediately clear who the event is for – by role, seniority, and problem context. When buyers recognize their own challenges in the language used, they self-select in. When they don’t, they quietly opt out. This reduces poor-fit registrations and increases confidence among ideal attendees.

Insight-led posts act as the first filter. Opinions, trade-offs, and practical observations attract buyers who already understand the problem space and filter out casual interest. That filtering improves attendance quality before a landing page is ever visited.

LinkedIn also sets expectations around depth. Serious discussion signals that the event will prioritize substance over surface-level promotion. This leads to better show-up rates, stronger on-site engagement, and more productive conversations.

The objective is not volume. It is predictable, relevant attendance that marketing and sales teams can actually use.

In B2B events, speakers often matter more than the hosting brand. Buyers trust people before logos, especially when evaluating whether an event will deliver meaningful insight. This makes speaker presence on LinkedIn one of the most powerful drivers of registration confidence within any LinkedIn event marketing strategy.

When speakers actively share their perspectives on the event topic, they create borrowed trust. Their existing credibility transfers to the event, which reduces perceived risk for potential attendees. This trust cannot be manufactured through corporate promotion alone. It must be visible, authentic, and sustained over time.

Activating speaker voices means encouraging them to engage in genuine conversation around the themes they will address. Their posts, comments, and interactions serve as social proof that the event will offer depth rather than surface-level discussion.

Peer validation amplifies this effect. When other respected professionals comment thoughtfully or reference the speaker’s ideas, it creates a visible credibility loop.

This approach positions speakers as educators, not promoters, and makes the event feel like a natural extension of an ongoing professional conversation.

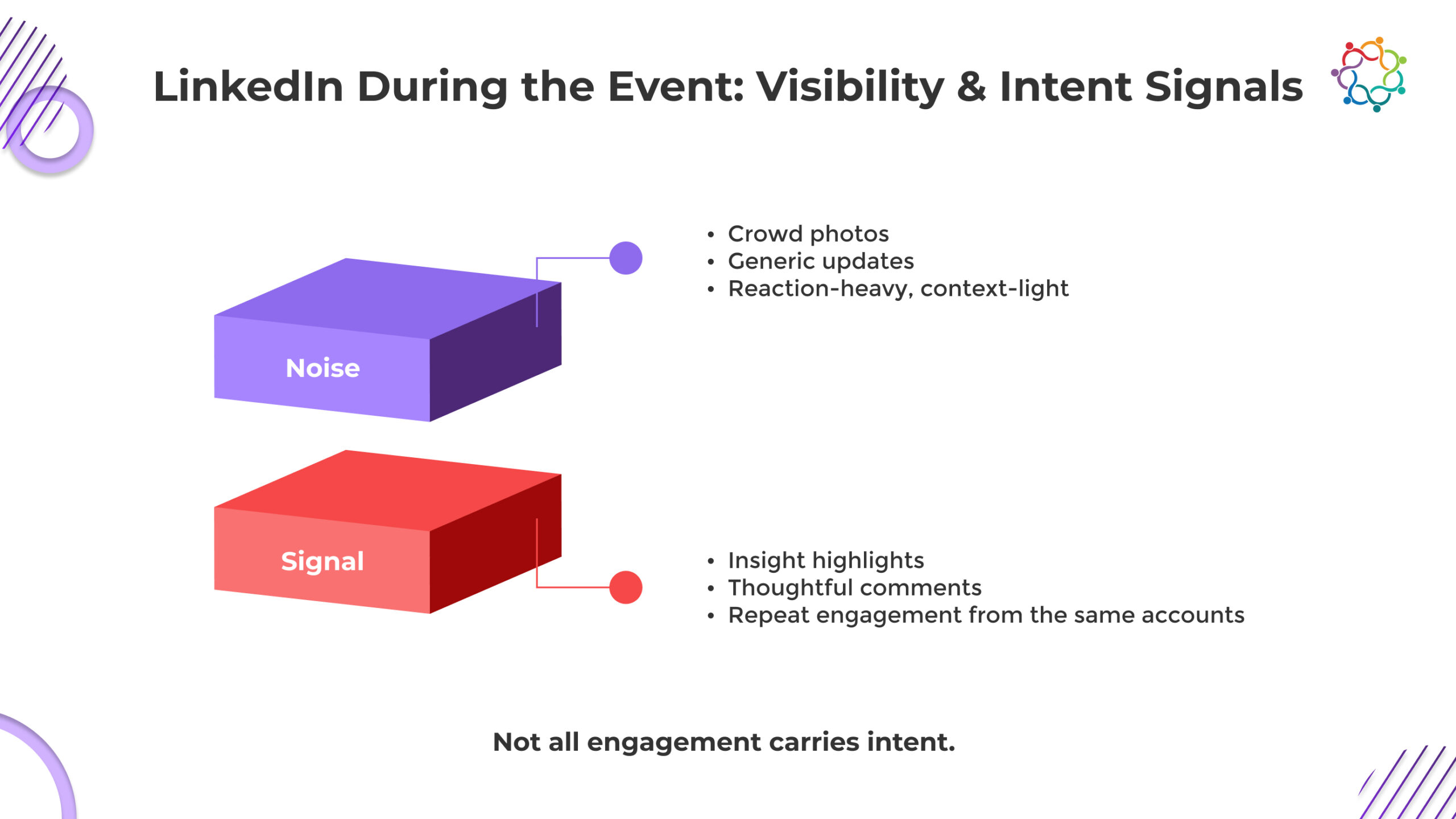

Live posting during events is often misunderstood. Many teams default to high-frequency updates that add noise without insight. In contrast, a mature LinkedIn event marketing strategy treats live visibility as signal amplification, not real-time documentation.

During the event, LinkedIn should surface moments that matter, not everything that happens. Buyers following along are not looking for proof that the event exists. They want evidence that meaningful conversations are taking place. This requires restraint and intentionality.

Effective live content highlights ideas, not attendance. A single post capturing a compelling insight can create more impact than dozens of generic updates. This also respects the experience of attendees, allowing them to stay present rather than feeling pressured to perform online.

Refrain from flooding feeds with images, hashtags, or vague enthusiasm. Posting too much can lower engagement quality and weaken credibility.

When LinkedIn content from the event seems deliberate and measured, it strengthens the impression that the event itself prioritizes substance over spectacle.

LinkedIn engagement during an event provides valuable context when interpreted carefully. Reactions and comments are not direct attribution metrics. Instead, they act as directional signals that support relationships and sales intelligence. A realistic LinkedIn event marketing strategy focuses on patterns instead of numbers.

Who engages matters more than how many engage. Comments from relevant roles, repeat interactions from the same accounts, and thoughtful responses indicate genuine interest.

A detailed comment often reflects deeper engagement than a reaction. When the same individuals engage multiple times across event-related posts, it suggests sustained interest rather than casual browsing. Sales teams can use this information responsibly by treating it as context, not triggers.

This approach avoids intrusive outreach and supports more informed dialogue. LinkedIn becomes a shared layer that helps marketing and sales understand engagement depth without overclaiming causation.

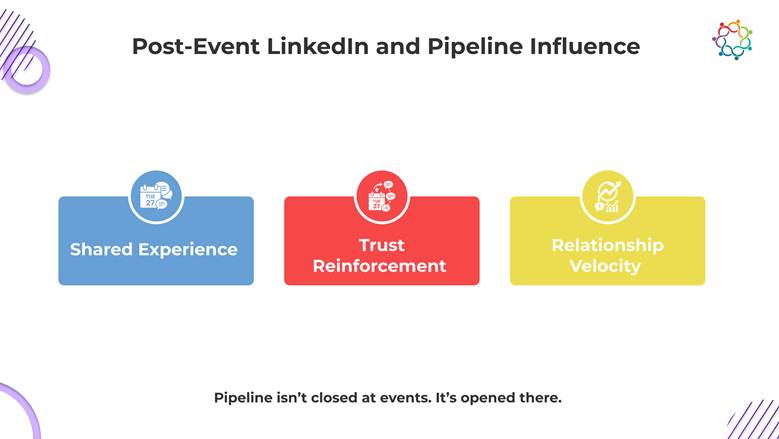

For many B2B teams, the event ends when the booth is packed up or the venue clears. In reality, this is when LinkedIn’s influence becomes most valuable. Post-event activity is where relationships mature, and pipeline influence takes shape. A strong LinkedIn event marketing strategy prioritizes continuation over recap.

Generic event summaries rarely move deals forward. What matters is extending the conversations that began during the event. This includes sharing follow-up insights, responding to public comments, and opening private dialogues grounded in shared context.

Post-event content should support follow-up. Buyers are more receptive when discussions feel like a natural next step rather than a sales pitch. Sales teams can reference event themes, shared moments, or public exchanges to reopen conversations with relevance and respect.

When trust continues to build after the event, pipeline influence becomes more efficient. LinkedIn supports this by keeping conversations contextual and connected to real interactions.

Events rarely create immediate pipeline in B2B. Their value lies in influence over time, not attribution. LinkedIn is what allows that influence to persist across long sales cycles, multiple stakeholders, and delayed decisions.

Post-event LinkedIn activity keeps key ideas visible during gaps in the sales process. When buyers continue to encounter familiar themes, speakers, or discussions in their feed, momentum is reinforced without forcing premature outreach.

This visibility also reactivates dormant or passive accounts. Engagement with event-related content signals renewed curiosity without a formal hand-raise, creating a natural and contextual re-entry point for sales.

Most importantly, LinkedIn extends event influence across the buying committee. Different stakeholders engage at different moments, allowing trust and relevance to build asynchronously rather than all at once.

Attribution oversimplifies this reality. Influence reflects it. Teams that measure progression instead of credit align event strategy with how revenue actually forms.

Misalignment between marketing and sales often undermines event ROI. Depending on how teams collaborate, LinkedIn can either widen this gap or help close it. A shared LinkedIn event marketing strategy creates clarity around roles, signals, and behavior.

Sales teams need visibility into what marketing activity means, not just what it produces. This includes understanding which engagement signals matter and how to use them without resorting to spammy outreach. Marketing, in turn, benefits from knowing what context sales finds useful.

When marketing and sales operate from the same assumptions, LinkedIn becomes a trust-building environment rather than a lead-harvesting tool.

In 2026, LinkedIn should be considered a strategic environment. Teams planning events must decide when LinkedIn deserves priority and when other channels may suffice. Asking the right questions matters more than choosing the right tools.

Before planning an event, teams should reflect on whether the target audience actively builds trust on LinkedIn. For senior, committee-based buyers, the answer is often yes. In these cases, depth of presence matters more than frequency of posting.

Answering these honestly helps teams design events that align with how buyers discover, evaluate, and engage in 2026.

(Also Read: Mastering Event Promotion on LinkedIn: A Comprehensive Guide)

B2B events no longer compete on agendas alone. They compete on trust. In 2026, that trust is built in public, through visible expertise, credible voices, and sustained conversation. LinkedIn is where this process unfolds.

Attendance follows belief. Buyers show up when they are confident the event will respect their time and intelligence. That confidence is shaped long before registration, influenced by what they see and who they trust.

A modern LinkedIn event marketing strategy treats the platform as an end-to-end growth environment. It supports discovery, credibility, engagement, and pipeline influence without forcing artificial metrics or shortcuts.

Events that win in 2026 earn relevance before they start, reinforce it during the experience, and extend it long after. LinkedIn is not a distribution channel in this context. It is where B2B events prove they matter.

(If you’re thinking about how these ideas translate into real-world events, you can explore how teams use Samaaro to plan and run data-driven events.)

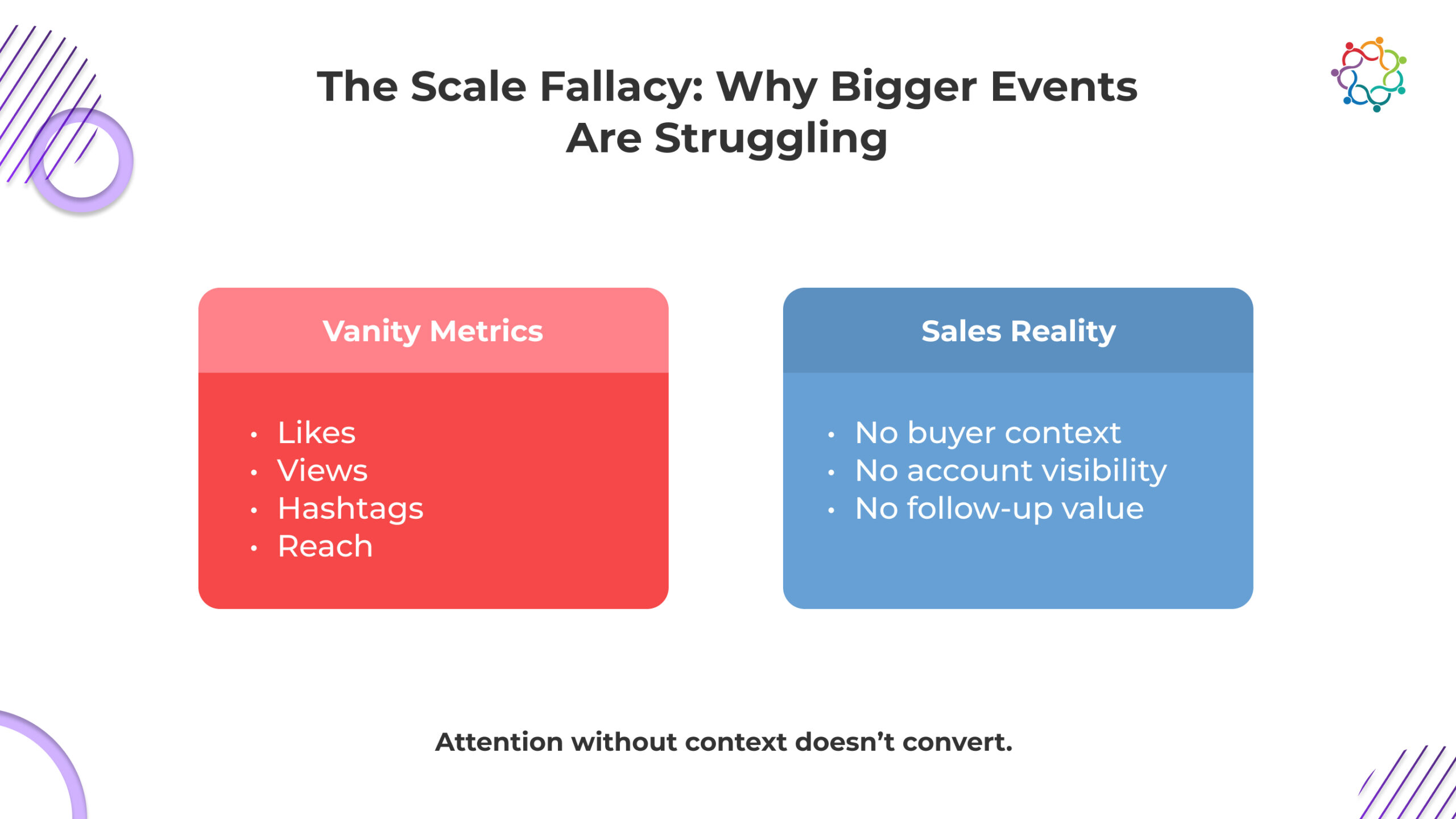

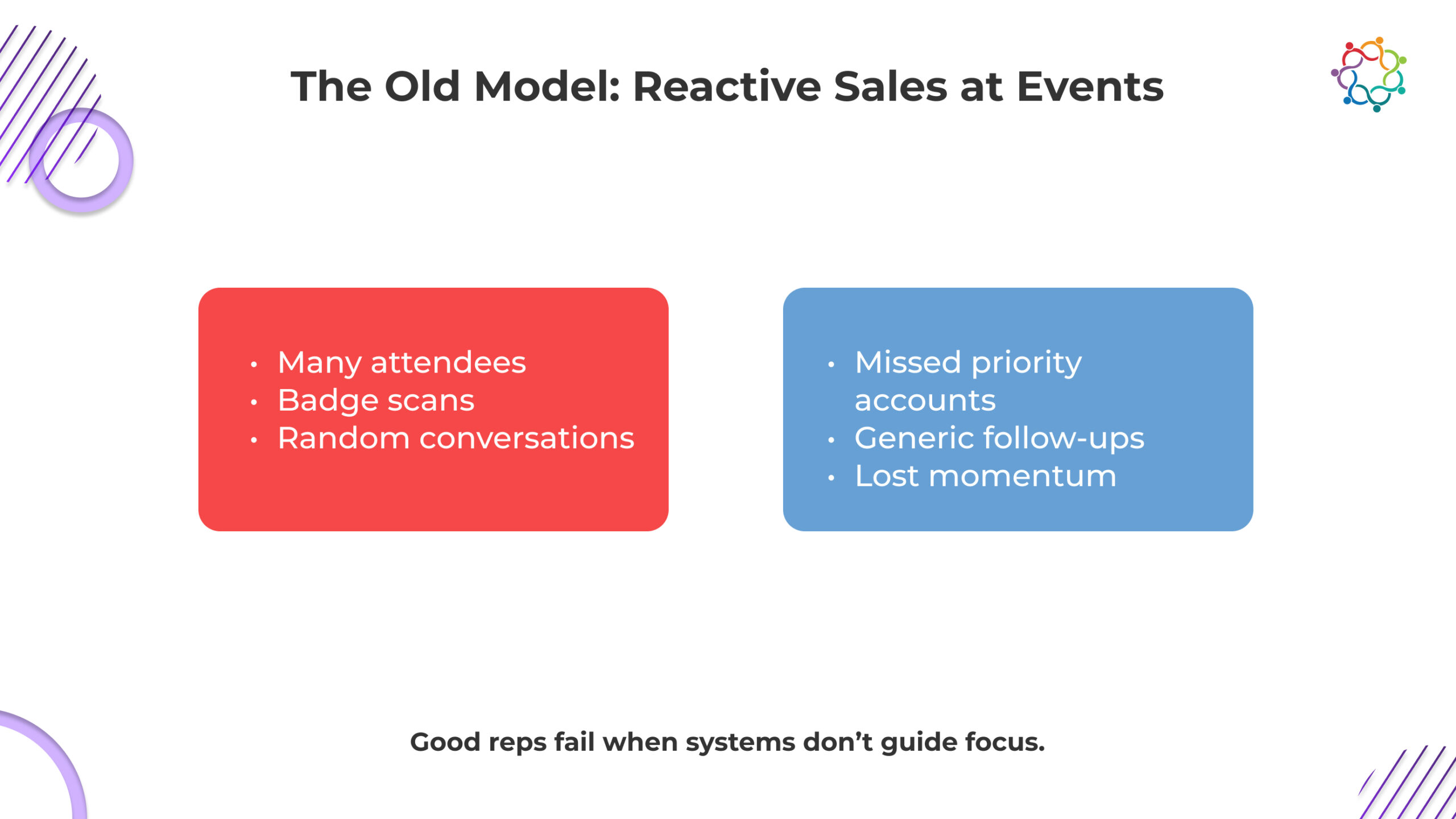

Trade shows create intense bursts of activity. Booths are staffed, calendars are full, and marketing teams post constantly across LinkedIn, X, and Instagram. Photos go up. Hashtags trend within small circles. Engagement numbers climb. On the surface, it looks like momentum. Inside the organization, however, sales teams rarely touch this content once the show ends.

The disconnect is not about effort. It is about purpose. Most event social media is built to prove presence rather than to support revenue conversations. Posts are designed to show that the brand was there, that the booth looked busy, and that something exciting happened. None of that helps a sales rep decide who to follow up with or how to continue a conversation that started on the show floor.

Sales leaders do not ask how many impressions a post received. They ask which accounts showed intent, which problems came up repeatedly, and which conversations are worth prioritizing. When social media does not answer those questions, it becomes invisible to revenue teams.

This is where a trade show social media strategy needs reframing. The goal is not to amplify noise during the event. The goal is to capture context, signal relevance, and extend real conversations beyond the booth. When social content is created with follow-up in mind, it becomes a sales asset instead of a marketing report.

This blog explains how to make that shift without turning social media into a tactical checklist. It focuses on intent, conversation capture, and post-event momentum because that is where pipeline influence actually shows up.

Impressions, likes, and views dominate post-event reports because they are easy to collect and easy to explain. Unfortunately, they are also easy to misinterpret. High engagement during a trade show often reflects algorithmic amplification rather than buying interest. A crowded hashtag attracts peers, competitors, vendors, and people who were never close to the booth.

The problem is not that these metrics are useless. The problem is that they are incomplete. They describe attention without context. Sales teams cannot act on a lead if they do not know who engaged, why they engaged, or whether that engagement connects to a real business problem.

Trade shows amplify this issue because social platforms reward volume and immediacy. Fast posts with generic captions often perform better than thoughtful content. That performance creates a false sense of success, even when none of the engagement maps to target accounts.

Common vanity metrics fall short because they fail to answer sales questions such as who showed repeat interest or which roles interacted with problem-specific content.

Sales teams need signals, not applause. Without intent signaling, social media becomes performative. A trade show social media strategy built on vanity metrics may look active, but it leaves no usable trail for follow-up.

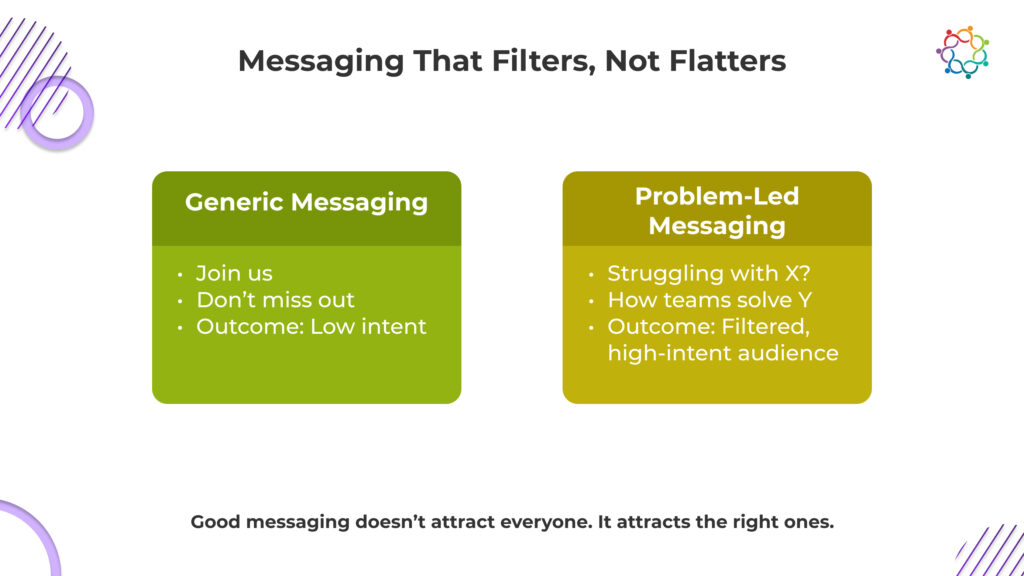

Social media at trade shows is often treated as a live broadcast channel. Posts go out quickly, reactions are tracked, and activity peaks during the event. What is usually missing is intent. When social content is not designed to support sales conversations, it becomes disconnected from pipeline impact.

To be effective, trade show social media must shift from promotion to enablement. Its role is not to narrate the event, but to capture substance and create continuity between the booth and post-event follow-up. This requires a clear understanding of what sales teams actually need once the show ends.

Social content should reflect the questions prospects ask, the objections they raise, and the problems they describe. When posts mirror real discussions happening at the booth, they become reference points that sales can use later to re-open conversations with context.

Trade show content should demonstrate how your team thinks, not just where it shows up. Specific insights, frameworks, or trade-offs signal relevance to the right audience and naturally filter out casual viewers who are not a fit.

Every post should be usable after the event. If a sales rep cannot share it in a follow-up message to reinforce a conversation, it likely does not capture meaningful value.

Social media should help continue discussions that started in person. Thoughtful content allows prospects to engage again, ask questions, or share internally, keeping momentum alive after the booth interaction ends.

When social media serves these functions, it stops being an awareness channel and starts supporting pipeline movement. This is the foundation of a revenue-aligned trade show social media strategy.

(Also Read: How To Craft Engaging Social Media Content for Your Events)

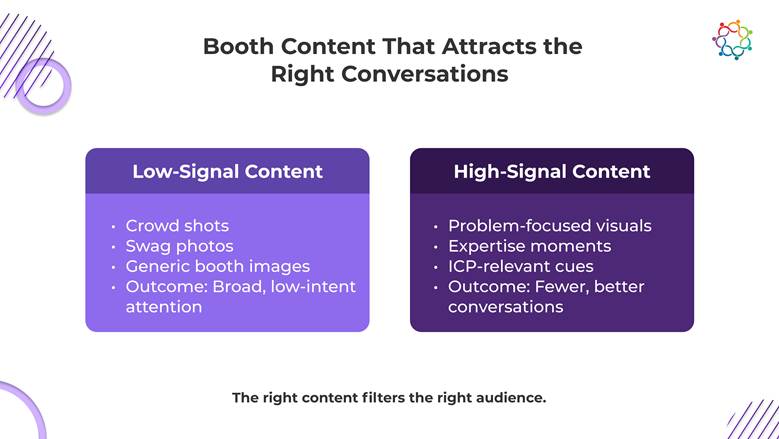

Most booth content optimizes for visibility. Crowded aisles and smiling teams signal activity, not expertise. For sales alignment, content must make one thing immediately clear: who the booth is for and what problem it exists to solve.

Effective booth content emphasizes moments of explanation, not celebration. A focused discussion around a real use case communicates relevance far better than a packed booth with no context. Short video clips work when they reveal how your team thinks, not how busy they are.

Visual cues act as filters. The language on screens, the diagrams shared, and even the props used signal whether someone belongs in the conversation. When content reflects specific challenges and trade-offs, it attracts people who recognize them and quietly repels those who do not.

Strong booth content is designed to start conversations, not document presence. Posts that invite responses from a defined role or industry segment create a natural quality signal. When the right people engage, that interaction is more valuable than raw reach.

High-performing booth content typically includes:

The goal is not more traffic. It is better conversations.

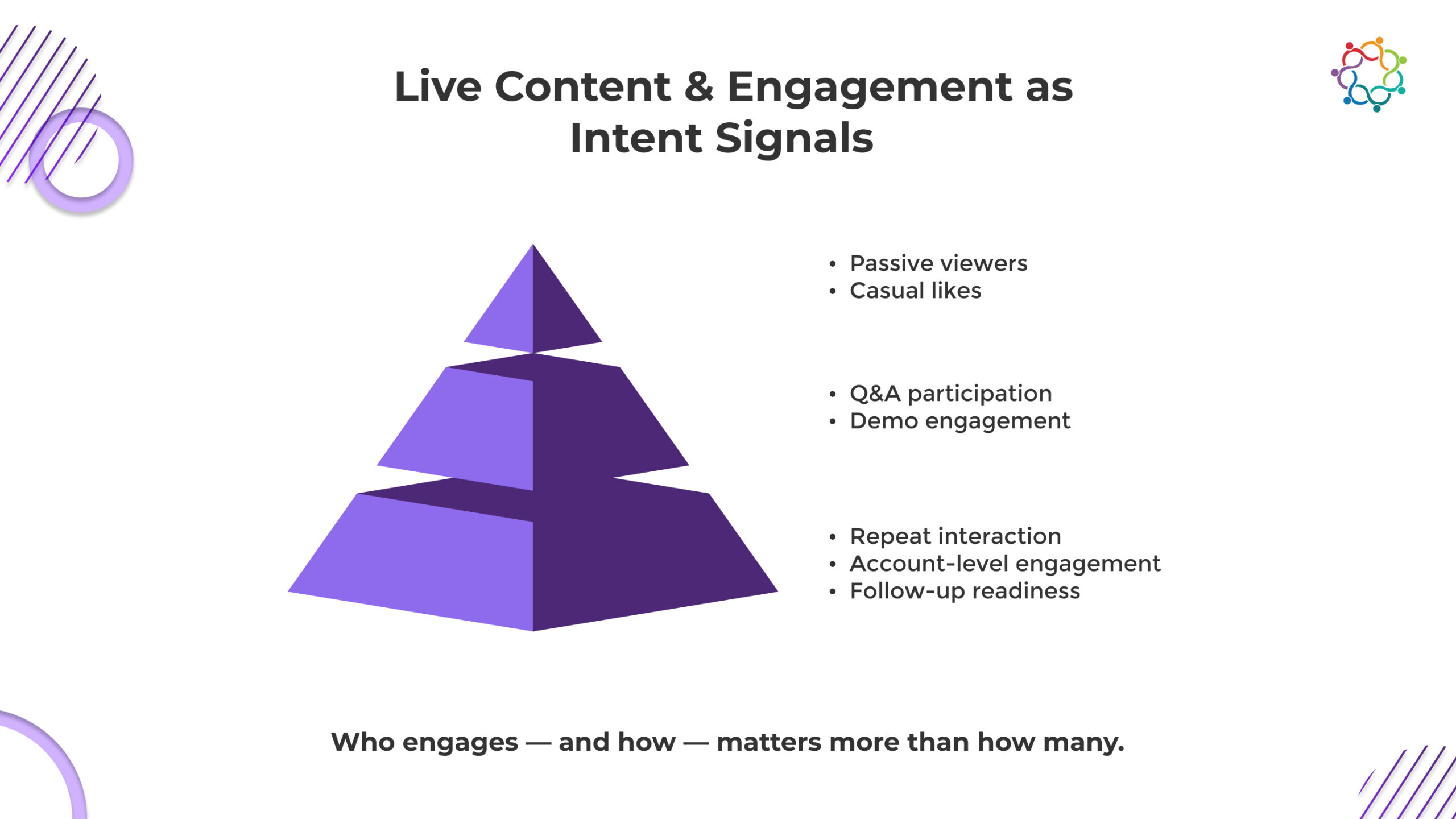

From a sales perspective, this changes follow-up priority immediately. Reps should ignore generic booth scans and focus on conversations triggered by specific content: people who commented on a framework, asked about a failed approach, or referenced a use case shown at the booth. Those signals indicate problem recognition, not casual interest. Everything else can wait.

Most live trade show content prioritizes energy over usefulness. Walk-throughs and quick interviews attract attention, but they rarely surface intent. To matter commercially, live content must function as a filter, not entertainment.

Live formats should intentionally narrow the audience. When sessions focus on real problems, constraints, and trade-offs, only relevant viewers stay engaged. That self-selection is where intent begins.

Expert-led Q&A works when it targets specific ICP challenges. The questions asked and how they are framed reveal urgency, problem awareness, and buying maturity. These are stronger signals than view counts.

Demos should be anchored in real use cases, not feature tours. When viewers recognize their own scenario, they stay. When they don’t, they leave. That drop-off is a feature, not a failure.

Depth is the filter. Assuming a baseline level of domain knowledge discourages casual viewers and surfaces serious prospects. Fewer, better viewers produce more sales value than broad visibility.

When live content filters effectively, social selling becomes practical rather than performative.

For sales teams, live engagement should determine who gets called first and how the conversation starts. Reps should prioritize viewers who asked questions, stayed through deeper segments, or attended multiple sessions, and lead with the same problem framing used in the live content. Viewers who dropped in briefly or reacted passively should not receive immediate outreach or demo-heavy follow-ups.
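The prioritization rule above can be written down as a small sketch. The `Viewer` fields and the "two or more sessions" cut-off are assumptions chosen for illustration; a real team would map these to whatever its streaming or event platform actually records.

```python
from dataclasses import dataclass


@dataclass
class Viewer:
    name: str
    asked_question: bool        # asked something during a live session
    watched_deep_segment: bool  # stayed through the in-depth portion
    sessions_attended: int      # number of live sessions joined


def signal_count(v: Viewer) -> int:
    """Count how many of the three strong signals a viewer showed."""
    return (
        int(v.asked_question)
        + int(v.watched_deep_segment)
        + int(v.sessions_attended >= 2)  # assumed threshold for "multiple"
    )


def follow_up_tier(v: Viewer) -> str:
    """'priority' if any strong signal is present, otherwise 'hold'."""
    return "priority" if signal_count(v) > 0 else "hold"


def call_order(viewers):
    """Priority viewers only, strongest combination of signals first."""
    priority = [v for v in viewers if follow_up_tier(v) == "priority"]
    return sorted(priority, key=signal_count, reverse=True)
```

Viewers in the `hold` tier are simply left out of the call list, which mirrors the guidance that brief or passive viewers should not receive immediate outreach.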

Social engagement at trade shows is often misread. Likes and views do not equal intent. Used carefully, however, engagement can add context that improves follow-up quality.

The key is restraint. Social signals should support sales activity, not replace it.

Who engages matters more than how many. A single interaction from a relevant decision-maker outweighs dozens of passive reactions.

Patterns matter more than moments. Repeat engagement, participation across related topics, or follow-up questions signal deeper interest and help sales prioritize outreach.

Role context matters. Engagement from practitioners, influencers, or decision-makers requires different responses and different next steps.

Social engagement should be treated as supporting evidence, never attribution. Its value lies in sharpening conversations, not claiming revenue impact.

Used correctly, social signals improve event lead quality without inflating expectations. They reinforce a trade show social media strategy built on intent, not vanity.

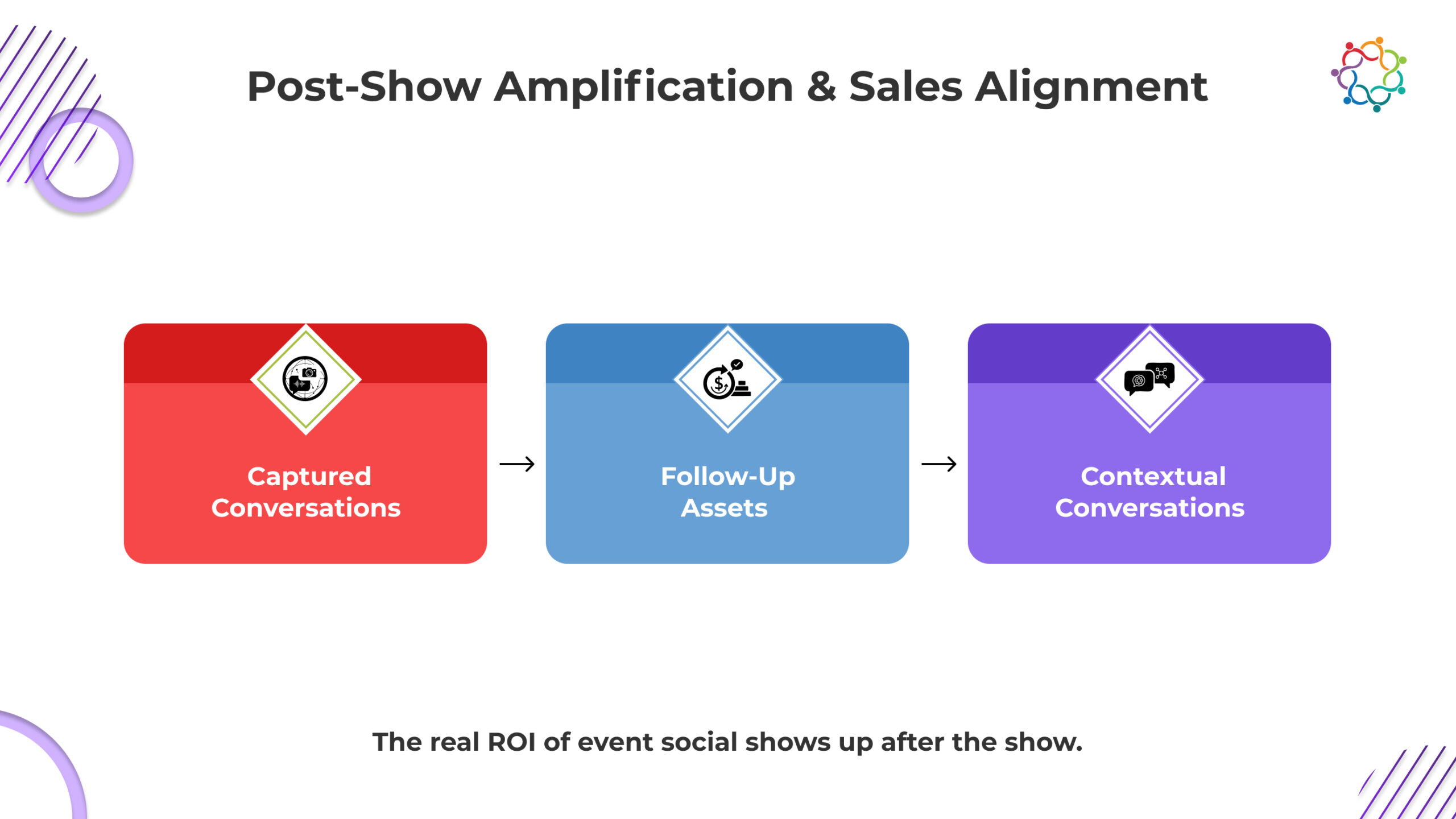

The most important phase of trade show social media begins after the event ends. This is when sales follow up, momentum fades or accelerates, and pipeline outcomes are decided. Content created during the show should be optimized for this moment.

Post-show amplification is not about recapping what happened. It is about reinforcing why conversations started and helping sales continue them with relevance.

Short clips, visuals, or insights captured during the event should be reused in follow-up messages. These assets help prospects recall discussions and reconnect quickly.

Sales teams need content that fits naturally into one-to-one communication. Lightweight, focused assets outperform long recaps or highlight reels.

Post-event content should align with themes discussed at the booth. This continuity strengthens credibility and keeps the dialogue moving forward.

Timely post-show content keeps your brand present while interest is still high. This sustained visibility supports deal acceleration rather than starting from zero.

This is where event content amplification drives results. By focusing on post-event momentum, teams realize the revenue potential embedded in a disciplined trade show social media strategy.

Misalignment between social, events, and sales teams often shows up during follow-up. Marketing hands over a folder of content. Sales does not know what to use or when. The result is generic outreach that ignores context.

Sales teams need clarity, not volume. They want to know which content connects to which conversation and why it matters. Marketing can help by packaging content around themes rather than channels.

Shared visibility is critical. When sales understands what was posted, who engaged, and what questions surfaced, follow-up becomes more relevant. This requires simple processes, not complex tools.

Avoiding content dumps means curating selectively. A few well-chosen assets tied to specific problems outperform broad recaps.

This operational clarity ensures that social media efforts translate into better conversations, strengthening the overall trade show social media strategy.

Rethinking trade show social media starts before the event. Teams should ask strategic questions about purpose and audience rather than platforms and formats. This mindset shift leads to restraint, which often improves results.

Choosing fewer content moments forces clarity. When every post must justify how it helps sales follow up, unnecessary content falls away. What remains is more focused and more useful.

Measurement should evolve as well. Instead of reporting impressions alone, teams should evaluate how often content is used in follow-up and whether it advances conversations.

By prioritizing usefulness over volume, teams build a sustainable approach. Restraint is not a limitation. It is a focus. This perspective keeps event ROI strategies aligned with revenue goals.

Trade show social media is not about being everywhere or posting constantly. It is about relevance and continuity. When content captures real conversations and supports intelligent follow-up, it earns a place in the revenue process.

If sales cannot use the content, it did not work. If engagement does not reveal intent, it is just noise. The true measure of success appears after the booths are packed up and the follow-up begins.

A disciplined approach turns social media into a conversation extender rather than an event highlight reel. That shift is what makes social media matter in the context of trade shows.

Trade show social media should fuel sales conversations, not distract from them.

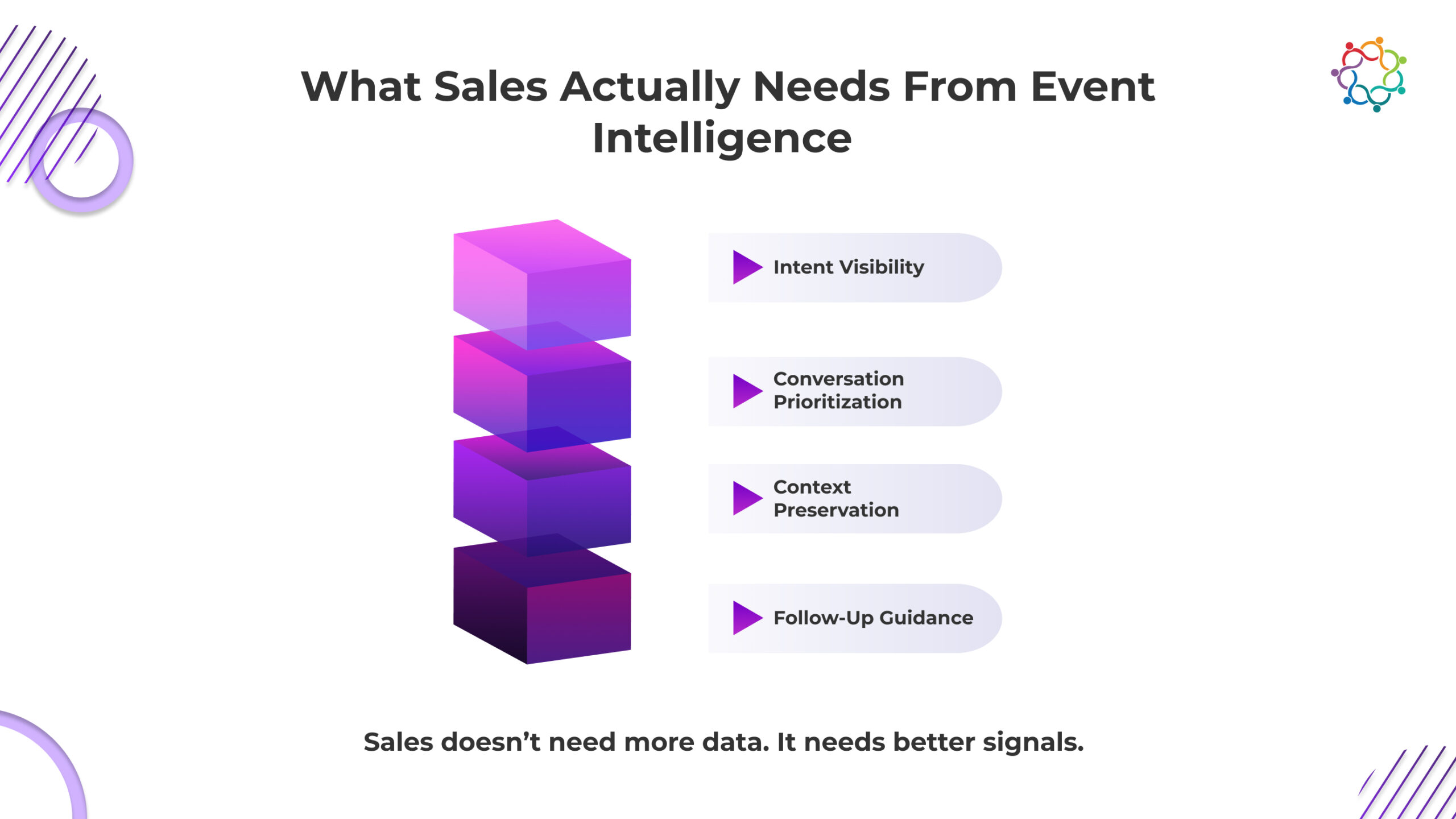

B2B organizations invest heavily in events, yet sales conversations at those events are still surprisingly poorly managed. Booths are crowded, calendars are full, and badges are scanned, but revenue teams frequently leave without knowing which conversations truly mattered. When choosing whom to approach, sales reps rely on intuition, proximity, and luck. Follow-up depends on memory and disorganized notes. Important context fades quickly once the event ends.

Events generate intense, high-signal interactions, but most teams treat those moments as temporary activity rather than durable sales intelligence. As a result, high-intent attendees are missed, strong buying signals are delayed, and follow-ups arrive too late or feel generic. None of this is due to poor effort. It is a structural gap in how conversations are identified, prioritized, and remembered.

This is where AI for event sales conversations begins to matter, not as automation or novelty, but as intelligence. The real opportunity is not replacing reps. It is helping sales teams focus their time and attention on the conversations that are most likely to influence the pipeline. When used thoughtfully, AI can shift events from chaotic activity to intentional sales moments before, during, and after the event.

Most event sales motions are still reactive. Reps greet whoever is in front of them, scan badges, exchange pleasantries, and move on. At scale, this looks productive while hiding serious inefficiencies.

The old model follows familiar patterns:

Even strong salespeople struggle here. They cannot see which attendees are actively evaluating solutions, which accounts are already in the pipeline, or which conversations deserve deeper qualification. In the moment, everything feels equally urgent, so prioritization disappears.

After the event, the damage compounds. Reps return to full inboxes and open deals. Event leads become just another list. Context fades, timing slips, and promising conversations go cold. Leadership then questions event ROI, mistaking a reactive system for an ineffective event.

Sales teams don’t fail at events due to lack of effort or tools. They fail because they lack timely, usable intelligence. Before introducing AI, it’s worth defining what intelligence actually needs to do for sales.

First, sales needs visibility into intent. Signals around buying interest, account relevance, and role alignment help reps separate casual curiosity from active evaluation.

Second, sales needs real-time prioritization cues. During events, decisions are made quickly. Intelligence should surface simple signals that guide where time is spent without disrupting natural conversations.

Third, sales needs shared context. Key conversation details must be captured and accessible across teams so follow-up reflects what actually happened, not assumptions.

Finally, sales needs guidance on follow-up. Knowing who to contact first, and why, turns outreach from reactive to intentional.

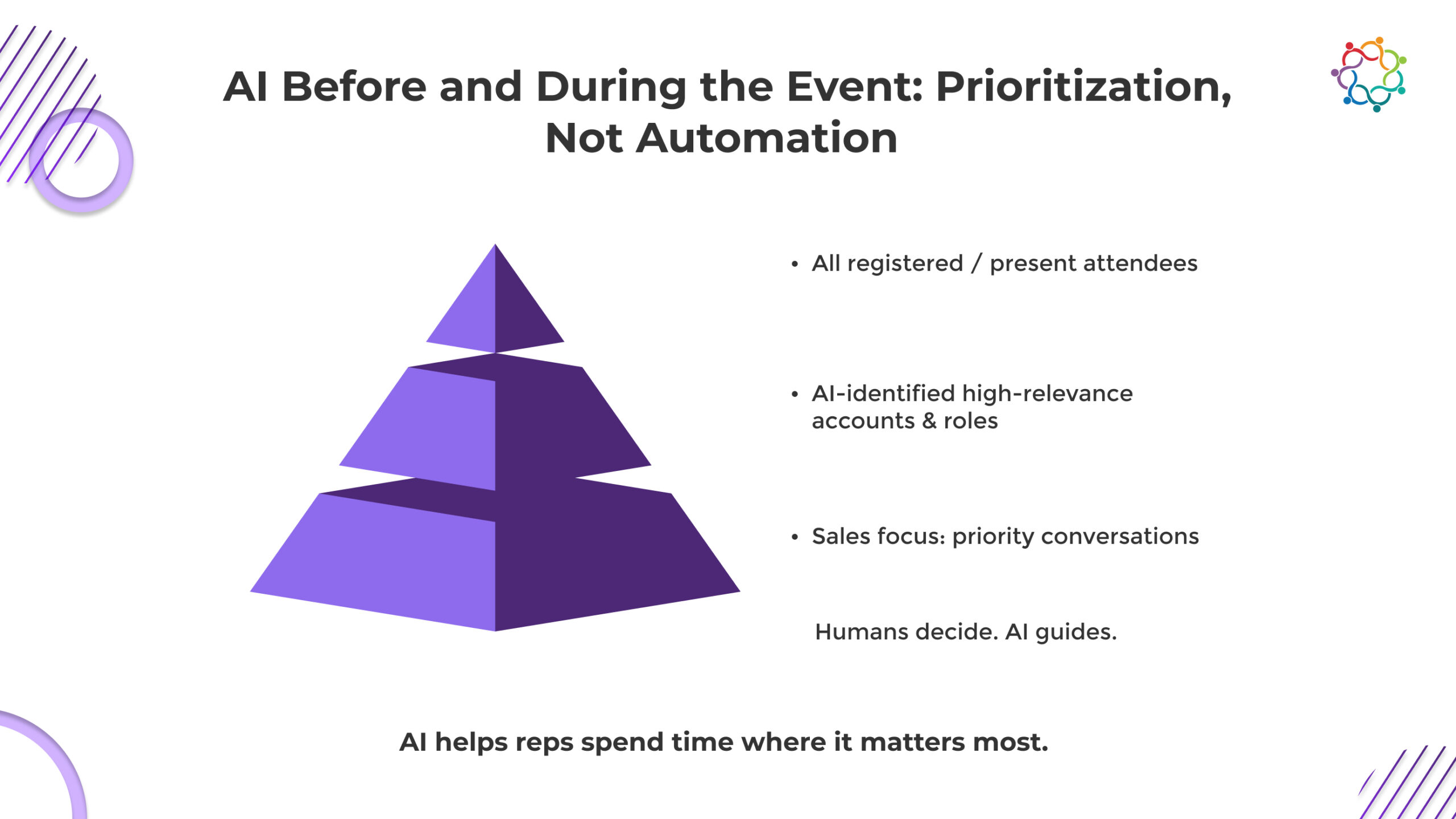

Preparation is where event outcomes are quietly decided. Yet most sales teams arrive with little more than a generic attendee list and a rough staffing plan. AI changes this by introducing intent-based preparation.

Before the event, AI can analyze registration behavior, session selections, past engagement, and account-level data to highlight attendees who are more likely to be in-market. It can also match roles and responsibilities to relevant offerings, helping reps understand why a conversation might matter before it starts.

Which accounts deserve proactive outreach?

How should senior reps be allocated versus general coverage?

Which meetings should be scheduled in advance?

Where should booth staffing be concentrated?

By reducing randomness, AI for event sales conversations turns preparation into a competitive advantage. Reps arrive knowing which conversations to seek out and which questions to ask. Marketing and sales leaders gain confidence that coverage aligns with revenue priorities, not just foot traffic.

Importantly, this does not eliminate spontaneity. It simply ensures that high-intent opportunities are not left to chance. When preparation is guided by signals rather than assumptions, events begin with focus instead of hope.

Prioritization matters most, and is hardest to maintain, on the event floor. Reps have to decide quickly where to spend their time as several conversations compete for their attention. AI helps them make these choices without taking over.

AI can surface priority attendees in real time during the event based on pre-identified intent, account importance, or live engagement. This helps reps decide whether to go deeper in a conversation, bring in a specialist, or arrange follow-up immediately. The goal is not interruption, but informed choice.

Better allocation of senior sales time.

More intentional qualification conversations.

Reduced missed opportunities during peak moments.

Used correctly, AI for event sales conversations feels empowering. Reps remain fully in control of the interaction. AI simply provides context that would otherwise be invisible. This balance preserves the human nature of selling while improving focus.

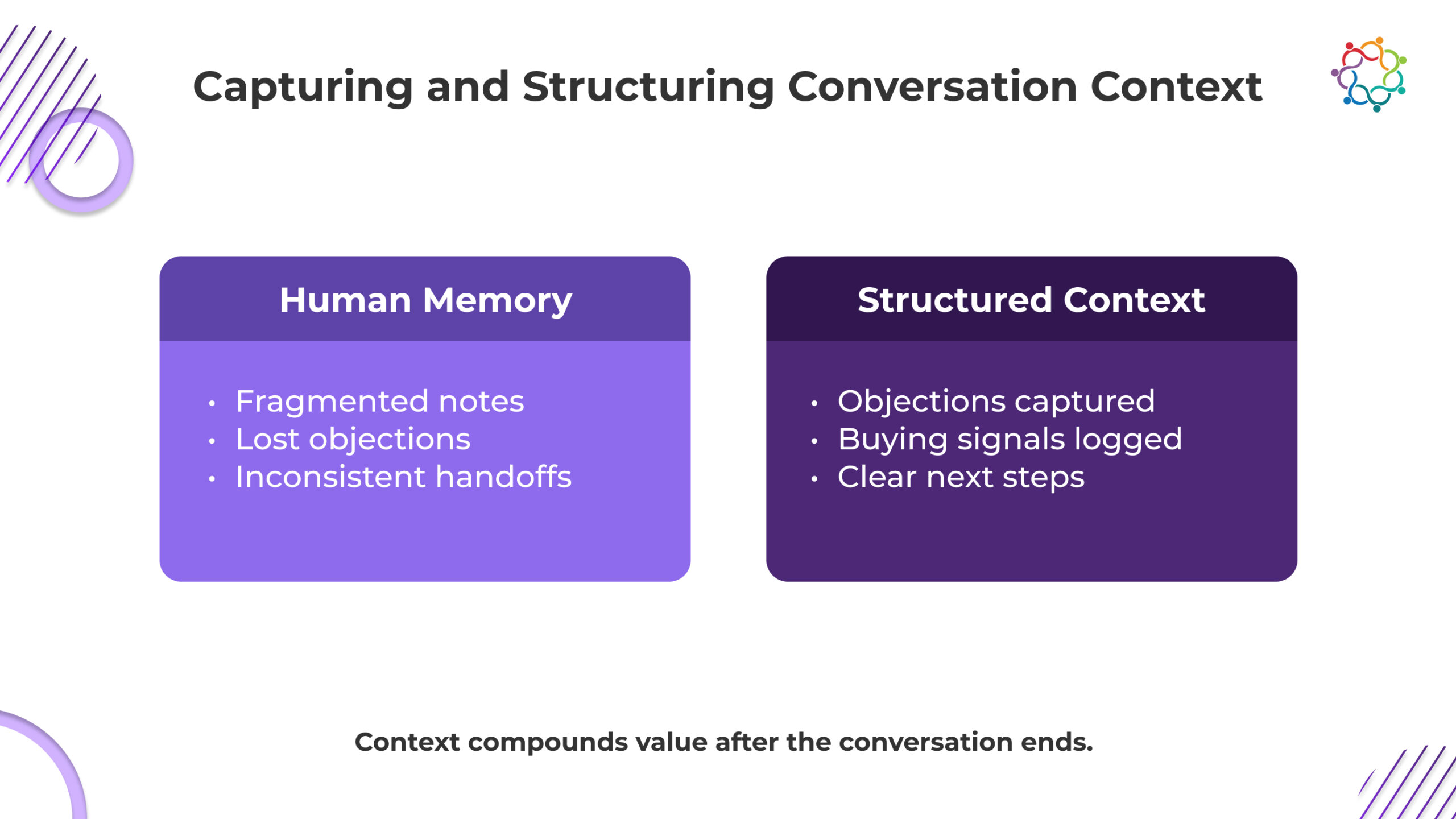

Event conversations are often rich in insight but fragile in memory. Reps move quickly from one conversation to the next, and important details are forgotten soon after each encounter. The goal of capturing context is not to record everything that was said. It is to preserve what is essential so that discussions can continue with clarity, relevance, and momentum. AI introduces a more reliable way to structure and retain this context without interrupting the human flow of selling.

Relying on handwritten notes or rushed post-event debriefs results in gaps and inconsistencies. AI helps structure the important elements of a conversation, such as needs, objections, and priorities, into a dependable format that salespeople can refer back to.

When the conversation context is structured, it does not stay locked with a single rep. AI enables intent, urgency, and next steps to be visible across sales, marketing, and account teams, reducing misalignment after the event.

Structured context allows follow-up messages to reference real discussions instead of generic themes. This improves relevance and timing without requiring reps to spend extra time reconstructing conversations.

A smaller number of well-documented conversations drives more revenue impact than a large volume of low-context leads. By prioritizing clarity over quantity, AI strengthens sales effectiveness and continuity beyond the event.

Post-event follow-up is where many teams lose momentum. Emails are delayed, messages are generic, and relevance fades. AI helps by aligning timing and content with actual intent.

After the event, AI can prioritize follow-ups based on conversation signals, engagement level, and account status. This ensures that high-intent prospects are contacted first and with appropriate context. Personalization is grounded in what was discussed, not in templated phrases.

Intent-based sequencing rather than batch blasts.

Messaging tied to specific needs or questions.

Clear recommendations on when to re-engage.

When used responsibly, AI for event sales conversations supports reps without replacing them. The rep still writes the message, chooses the tone, and builds the relationship. AI simply ensures that effort is focused where it matters most.

The result is a follow-up that feels timely, relevant, and human. Prospects recognize that they were heard, not processed.
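Intent-based sequencing can be illustrated with a simple weighted score. The sketch below is an assumption-heavy example, not a description of any particular product: the three inputs mirror the signals named above, while the `Lead` fields, the 0 to 1 scales, and the weights are invented for illustration.

```python
from dataclasses import dataclass

# Illustrative weights only; a real team would agree on these with sales.
WEIGHTS = {"conversation": 0.5, "engagement": 0.3, "account": 0.2}


@dataclass
class Lead:
    name: str
    conversation: float  # 0-1, strength of buying signals from the conversation
    engagement: float    # 0-1, level of engagement during the event
    account: float       # 0-1, account status (e.g. higher for open opportunities)


def priority_score(lead: Lead) -> float:
    """Weighted sum of the three signals, between 0 and 1."""
    return (
        WEIGHTS["conversation"] * lead.conversation
        + WEIGHTS["engagement"] * lead.engagement
        + WEIGHTS["account"] * lead.account
    )


def follow_up_queue(leads):
    """Order leads so the highest-intent prospects are contacted first."""
    return sorted(leads, key=priority_score, reverse=True)
```

The queue only decides order. What the rep says, and how, stays with the rep.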

Events rarely create instant deals, which makes attribution misleading. AI helps clarify influence without overclaiming credit by connecting conversation signals to pipeline movement.

This includes identifying patterns such as:

This is not about ownership. It is about contribution. When sales leaders can see how event conversations support deal progression, confidence in event investment increases. Decisions become informed rather than defensive.

When influence is visible, events stop being judged by lead volume alone and start being evaluated by their role in revenue motion.

(Also Read: The Ultimate Guide to Integrating Sales Enablement and Event Marketing)

Cross-team alignment often breaks down around events. Sales questions lead quality, marketing questions follow-up, and RevOps struggles to reconcile data. AI insights can reduce this friction if shared thoughtfully.

Alignment starts with shared definitions of intent. When teams agree on what signals matter, AI outputs become trusted rather than debated. Visibility should empower, not police. The goal is clarity, not micromanagement.

Clear ownership of how insights are used.

Guardrails that prevent data overload.

Transparency into how signals are generated.

Importantly, this does not require new dashboards for every team. Sometimes the most effective alignment comes from simpler, shared views and consistent language.

When AI insights are framed as support rather than control, trust grows. Sales, marketing, and RevOps move from defending their metrics to collaborating on outcomes.

AI adds credibility to event sales strategies only when its boundaries are clearly understood. Algorithms cannot direct every aspect of a sales conversation. AI is useful when it complements judgment, not when it replaces it. This section explains where AI genuinely improves event sales conversations and where human decision-making remains essential.

Prioritizing attention and time

AI excels at analyzing multiple signals at once to help reps decide which conversations deserve deeper focus, especially in busy event environments.

Identifying patterns humans miss

By connecting engagement behavior, account data, and historical outcomes, AI surfaces intent patterns that are difficult to spot manually.

Preserving and structuring context

AI helps retain key details from conversations so insights are not lost after the event, enabling more relevant and timely follow-up.

Improving consistency across teams

It creates a shared understanding of intent and conversation quality, reducing variation caused by individual memory or subjective interpretation.

Replacing human judgment in conversations

AI cannot read emotional cues, adapt tone in real time, or navigate complex interpersonal dynamics that define effective selling.

Handling ambiguity without guidance

When data is incomplete or situations shift unexpectedly, AI lacks the situational awareness that experienced reps bring.

Building trust and rapport

Relationships are formed through listening, empathy, and credibility, all of which remain distinctly human skills.

Making ethical or strategic trade-offs

Decisions involving sensitivity, long-term partnerships, or brand trust require human oversight and accountability.

Events have always been about conversations. Technology should serve that truth, not distract from it. The real promise of AI for event sales conversations is not efficiency for its own sake. It is focus, relevance, and continuity.

Sales teams have more clarity when AI is used strategically before, during, and after an event. They know who to talk to, how to set priorities, and when to follow up. As attention improves, so do conversations.

The best results come when people apply better intelligence, not when they give up their judgment. Events become more deliberate and less chaotic. Sales conversations become a strategic asset rather than a blind spot.

No automation replaces a great rep. But better intelligence helps great reps do their best work.

Enterprise attention has become one of the scarcest resources in 2026. Buyers are better informed, calendars are tighter, and decision-making groups are larger and harder to coordinate. In this environment, the assumption that event success depends on scale is starting to fracture. Leadership teams are increasingly questioning why large, expensive events generate so much activity but so little clarity.

For years, event strategy was built around visibility. More attendees meant more relevance. Bigger rooms implied greater impact. Yet enterprise teams now see a growing disconnect between attendance numbers and business outcomes. Sales conversations are rushed. Engagement diffuses across too many interactions. Feedback exists, but rarely points to a clear next step.

What is emerging instead is a shift toward deliberate, smaller formats that prioritize relevance over reach. These are not scaled-down flagship events or budget-driven compromises. They are intentional investments designed to improve alignment, learning, and decision movement. The rise of micro-events reflects a broader change in how enterprise teams think about engagement, attention, and return on effort.

Success is being redefined. With the right participants in the room, fewer voices often lead to better discussions, faster insight, and greater confidence in decisions. This blog explores why that shift is happening and why it matters now.

Large events are not failing because teams lack ambition or effort. They struggle because scale introduces friction that enterprise organizations can no longer ignore. As events grow, attention fragments, conversations compress, and the conditions required for meaningful engagement quietly disappear.

At large events, attendees are constantly pulled in multiple directions – parallel sessions, back-to-back meetings, networking zones, side conversations. Focus becomes transactional. Conversations that require context, continuity, and time are cut short or deferred indefinitely. The event may look busy, but cognitive bandwidth is thin.

This fragmentation is especially damaging in enterprise buying environments. Complex deals depend on trust, nuance, and sustained dialogue across multiple stakeholders. One-to-many formats struggle to accommodate layered concerns or align different decision-makers in a coordinated way. The result is surface-level interaction where depth is required most.

Scale also creates a signal problem. High activity produces impressive volume (booth scans, session check-ins, survey responses) but very little clarity. Teams leave with dashboards full of numbers that are difficult to interpret and harder to act on. Intent, readiness, and next steps remain ambiguous despite the apparent success of the event.

All of this comes at a rising cost. Venue size, travel, production, and sponsorship expenses escalate quickly, while attribution remains murky. When outcomes are unclear, leadership stops asking how to improve the event and starts questioning whether the scale itself is worth defending.

The core issue isn’t execution. It’s the assumption that size guarantees impact. That assumption no longer aligns with how enterprise buyers make decisions or how revenue teams are expected to operate today.

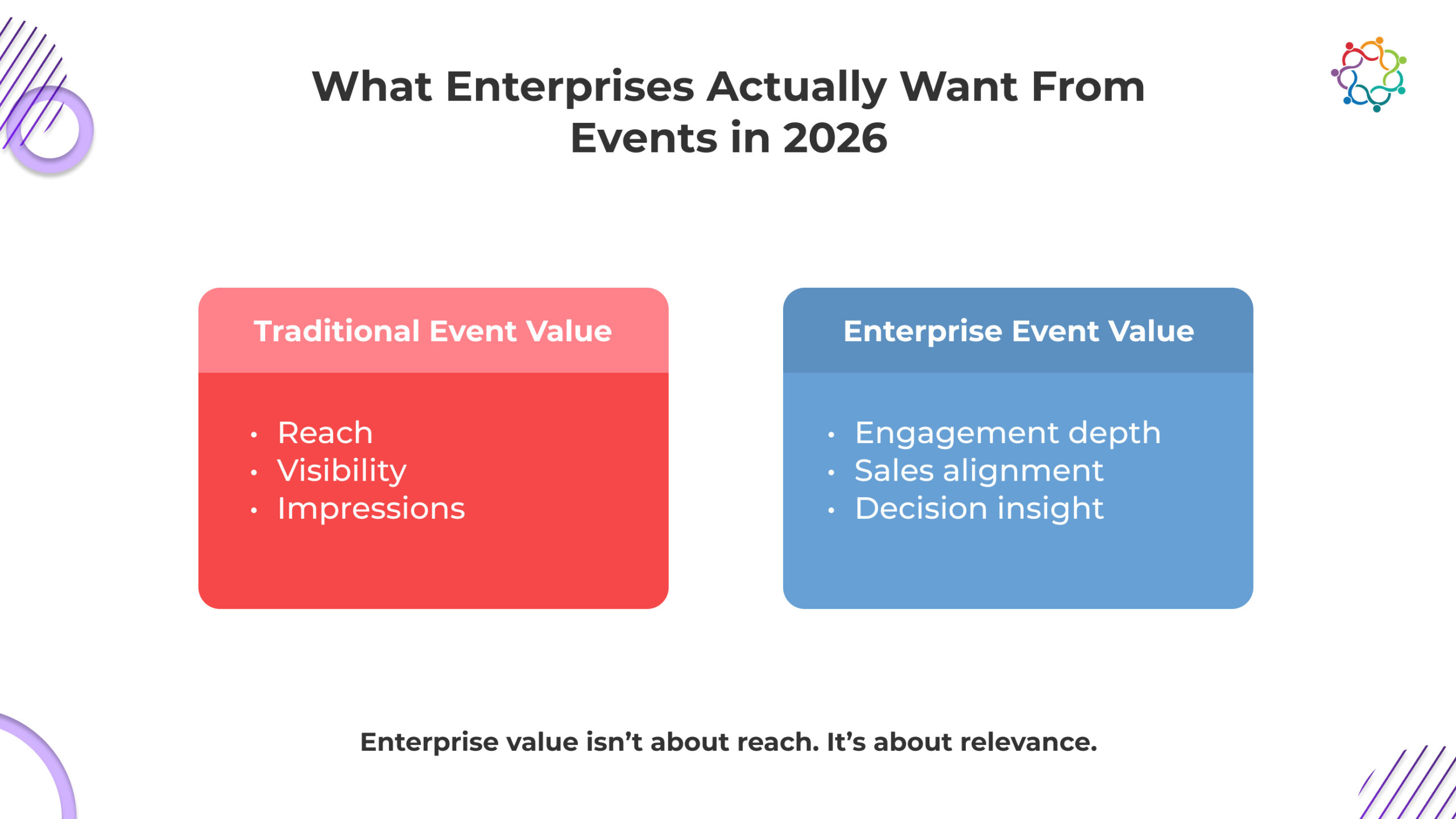

Enterprise teams are no longer satisfied with events that simply generate awareness. They expect events to move decisions forward. In 2026, value is defined less by how many people attend and more by what changes as a result of those interactions. What enterprises are actively looking for includes:

Teams want conversations that surface real priorities, objections, and constraints, not surface-level engagement that ends when the event does.

Events should help align multiple decision-makers in the same context, reducing the need for weeks of follow-up to reconstruct intent and consensus.

Leadership expects quick, actionable feedback on messaging, positioning, and product relevance. Events must generate signals that inform decisions without long delays.

Events deliver value when sales teams can engage directly, learn from buyers, and influence outcomes without competing for attention in crowded environments.

These expectations naturally favor smaller, more focused formats. They align with enterprise priorities around engagement depth, audience precision, and revenue clarity. Micro-events are not a passing trend. They are a direct response to what enterprises actually need from events today.

Micro-events are often misunderstood as simply smaller versions of traditional events. In reality, they represent a different design philosophy altogether. Their effectiveness comes from intentional constraints that force clarity and focus.

At the heart of this approach is curation. Attendance is defined by relevance, not availability. Audiences are selected based on clear criteria such as role, account fit, or buying stage. This ensures that conversations are meaningful and aligned from the start.

Another defining feature is structure. Micro-events prioritize interaction over passive consumption. Sessions are designed to encourage discussion, debate, and shared problem-solving. This creates space for deeper engagement and more honest exchanges.

Key characteristics typically include:

This is where the micro-events strategy becomes critical. Success depends on intentional design choices that align audience, content, and outcomes. These events are not about filling seats. They are about creating environments where the right conversations can happen efficiently and effectively.

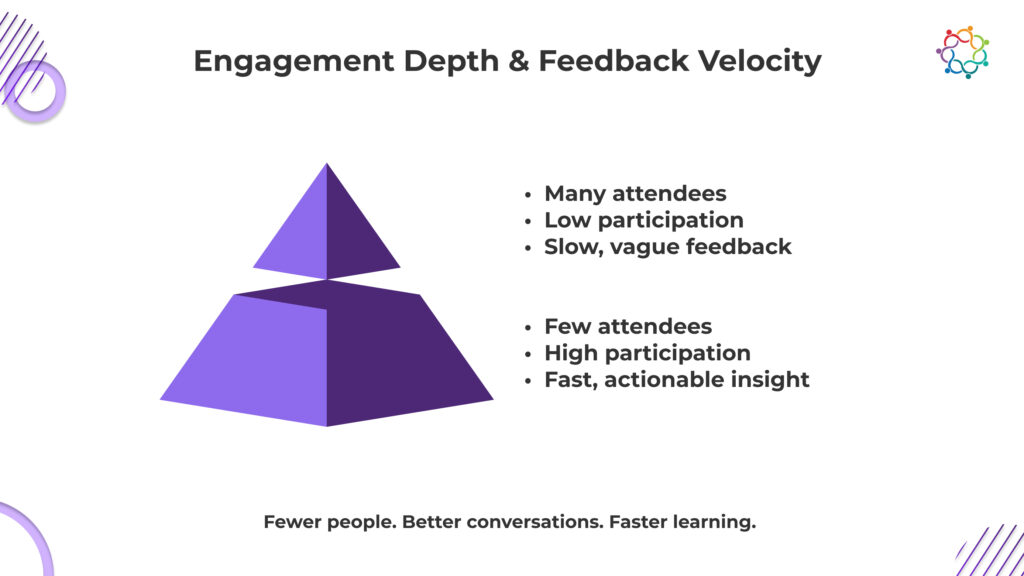

As the room gets smaller, engagement changes fundamentally. Distractions drop and accountability rises. Participants are no longer anonymous attendees; their presence carries weight. Contribution is expected, not optional. Events shift from being attendance-driven to participation-driven, which is where meaningful value begins.

Smaller groups reduce social hiding. People speak more freely, ask sharper questions, and listen more closely because the conversation depends on them. Dialogue becomes two-way rather than performative.

This environment also changes the quality of discussion. Without the pressure of stagecraft or promotion, buyers are more willing to surface real constraints, competing priorities, and unresolved concerns. Conversations last longer and move beyond surface agreement into nuance.

When multiple stakeholders are present, buying dynamics become visible. Alignment, hesitation, and influence can be observed directly instead of inferred later. For sales teams, this visibility is often more instructive than hundreds of disconnected interactions. Depth replaces guesswork with understanding.

One of the most practical advantages of micro-events is how quickly teams learn from them. In smaller settings, feedback is immediate, contextual, and specific. Teams don’t need to interpret meaning from aggregated surveys or stitched-together notes.

Because discussions are more candid, patterns emerge faster. Objections repeat. Interests cluster. Priorities sharpen. This accelerates learning not just for sales, but across marketing, product, and leadership teams.

The timing matters as much as the content. Feedback is often processed while the experience is still unfolding, sometimes in the same room. Assumptions can be tested and reframed immediately, rather than weeks later in a post-event report.

This shortens learning cycles and increases confidence in decisions. In contrast, high-volume environments often delay insight until momentum is already lost.

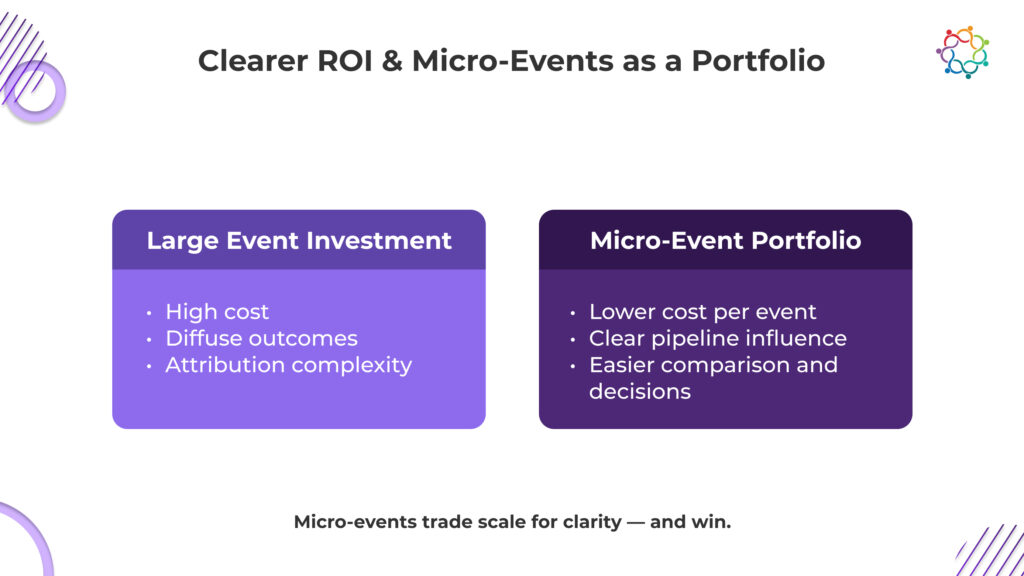

Large events generate numerous touchpoints that are difficult to isolate and even harder to interpret. Measurement has always been challenging at scale, particularly when goals are diffuse. Micro-events reduce this complexity by narrowing focus and variables.

When attendance is intentional and objectives are explicit, it becomes easier to connect participation to pipeline movement or deal progression. Teams can identify which conversations mattered and why, leading to more honest evaluations of impact.

While micro-events may cost more per attendee, they often cost less per meaningful interaction. This reframes ROI discussions away from volume justification and toward outcome credibility.
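The arithmetic behind this reframing is simple enough to sketch. The example below is a minimal illustration, not a measurement method: the event names, costs, and counts are hypothetical, and "meaningful interaction" is assumed to be whatever the team has agreed counts as a conversation worth following up.

```python
from dataclasses import dataclass


@dataclass
class EventResult:
    name: str
    total_cost: float             # all-in spend for the event
    attendees: int                # people who actually showed up
    meaningful_interactions: int  # conversations the team judged worth follow-up


def cost_per_attendee(e: EventResult) -> float:
    return e.total_cost / e.attendees


def cost_per_meaningful_interaction(e: EventResult) -> float:
    return e.total_cost / e.meaningful_interactions


# Hypothetical figures, for illustration only.
large = EventResult("large conference", 200_000, 2_000, 40)
micro = EventResult("executive roundtable", 15_000, 20, 12)

for e in (large, micro):
    print(
        f"{e.name}: {cost_per_attendee(e):,.0f} per attendee, "
        f"{cost_per_meaningful_interaction(e):,.0f} per meaningful interaction"
    )
```

With these made-up numbers, the roundtable costs 750 per attendee against 100 for the conference, yet 1,250 per meaningful interaction against 5,000. The comparison only holds if both events count interactions by the same definition.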

Measurement becomes less about defending activity and more about understanding influence. The goal isn’t perfect attribution. It’s realistic clarity. When noise drops, insight rises, and decisions improve as a result.

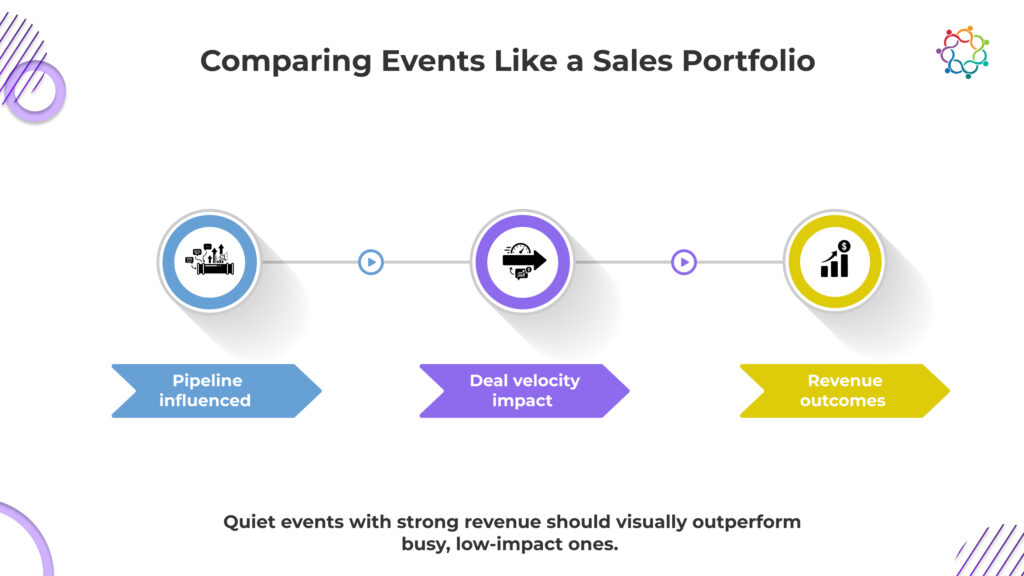

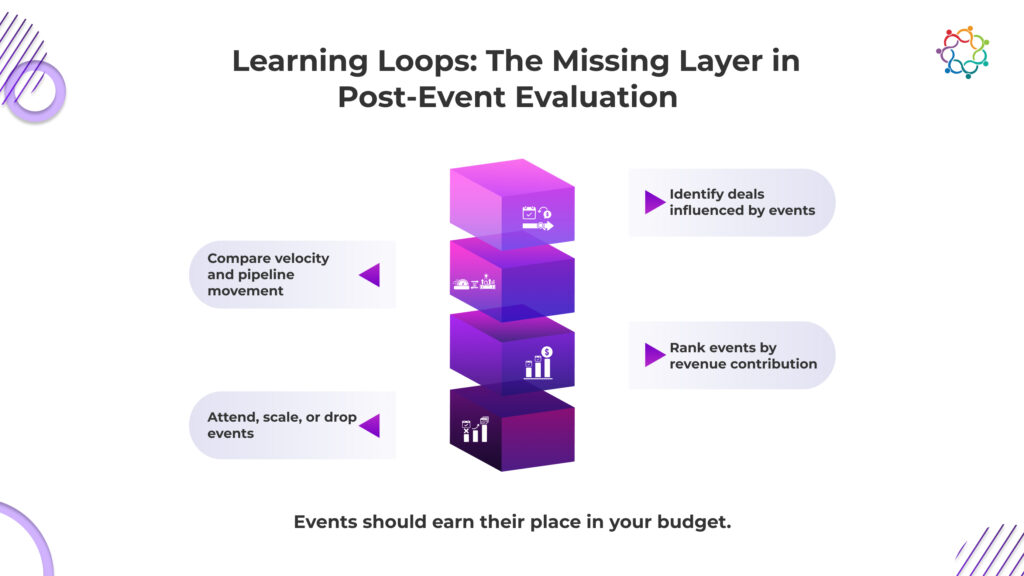

Treating micro-events as isolated experiments limits their value. Enterprises that see the strongest results think in terms of portfolios rather than individual events. Each micro-event serves a specific purpose within a broader engagement strategy.

Different formats can support different stages of the pipeline. Some may focus on early-stage education, while others facilitate late-stage alignment or customer expansion. Sequencing these events creates momentum and continuity.

This portfolio mindset also allows teams to compare investments more objectively. Micro-events and large events become options within a spectrum, evaluated based on expected outcomes rather than tradition.

Opportunity cost is an important consideration. Defaulting to scale can crowd out more precise investments. A thoughtful micro-events strategy ensures that resources are allocated where they can create the most impact.

By viewing micro-events as a strategic asset rather than a tactic, enterprises elevate their role from experimental to essential.

Adopting micro-events is not just a change in event format. It requires a fundamental shift in how teams define success. For years, visibility and scale were treated as a proxy for impact. In 2026, that equation no longer holds. Enterprise teams need to prioritize clarity, alignment, and decision support over reach. That shift begins before planning starts, with more deliberate thinking about purpose and outcomes.

Not every event needs to be widely seen to be valuable. Teams should focus on whether an event advances understanding, alignment, or momentum rather than how many people it attracts.

Leaders must decide when focused engagement delivers more value than broad exposure. Certain audiences and buying stages benefit far more from smaller, curated interactions.

Micro-events only work when sales and marketing agree on objectives, audience selection, and success criteria. Misalignment undermines focus and outcomes.

Fewer, well-aligned events often outperform a packed calendar. Discipline in what not to do is as important as execution.

When micro-events are intentional, aligned, and measured honestly, they reward teams with insight, confidence, and clearer decision-making.

As buyer behavior shifts, enterprise events are being forced to evolve with it. Attention is no longer granted by scale or visibility. It is constrained, selective, and governed by relevance. In this environment, precision consistently outperforms presence.

Micro-event strategies are not a reaction to budget pressure, nor are they a tactical compromise. For teams that understand the cost of distraction, they represent a structural advantage. By prioritizing engagement depth, feedback quality, and ROI clarity, organizations can design events that actively move decisions forward rather than merely support awareness.

A well-executed micro-event strategy accepts a hard truth: under the right conditions, fewer participants produce stronger outcomes. Being everywhere will not earn attention in 2026. Precision does. Attention is earned in focused, high-intent settings where conversations shape decisions.

Paid promotion has become the default response when event registrations slow down. Budgets are unlocked, campaigns are rushed live, and performance is judged almost entirely on registration volume. When results disappoint, the conclusion is predictable: paid ads do not work for events. This assumption misses the real issue. The problem is rarely the channel. It is the expectation placed on it.

In 2026, event advertising sits at the intersection of demand generation, brand positioning, and pipeline accountability. Treating it as a last-minute attendance booster ignores how paid media actually functions. Ads amplify whatever strategy already exists. If the event has unclear value, weak targeting, or mismatched goals, paid spend simply scales those flaws faster.

Many teams also collapse two very different objectives into one metric. Driving attendance is not the same as driving outcomes. High registration numbers can coexist with low engagement, poor follow-up, and zero pipeline influence. When success is defined narrowly, paid campaigns are set up to fail before they start.

This article reframes paid promotion as an investment decision. The goal is not to run more ads, but to run them with intent, discipline, and a realistic view of ROI.

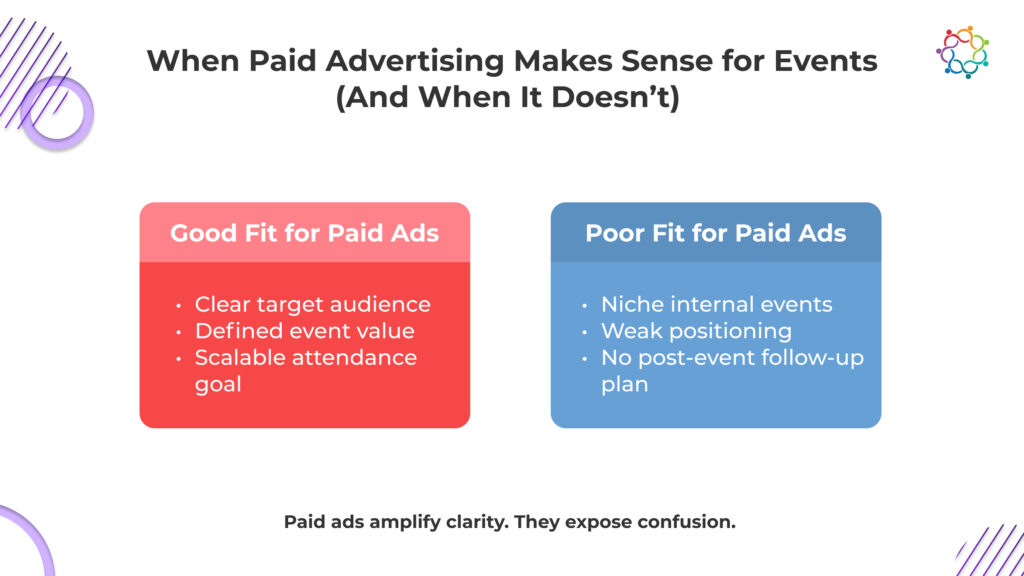

Paid promotion is powerful, but it is not universally appropriate. Knowing when to use it is the first act of responsible spending. Some events benefit significantly from paid support, while others perform better through owned, earned, or partner-driven channels.

Paid ads typically perform best when the event has clear intent signals and a well-defined audience. This includes account-specific executive events, product-led workshops, and mid-funnel webinars. These formats allow targeting precision and message clarity to do real work.

By contrast, paid ads struggle when the event value is broad or ambiguous. Community meetups, early-stage brand events, or partner-heavy conferences often rely more on trust and relationships than on ad-driven discovery. In these cases, paid promotion may inflate registrations without improving attendance quality.

Teams should also recognize situations where paid spend creates false confidence. If internal alignment on audience, follow-up, or success metrics is missing, ads only mask deeper issues.

Before committing budget, ask:

Saying no to paid ads in the wrong context preserves budget and credibility.

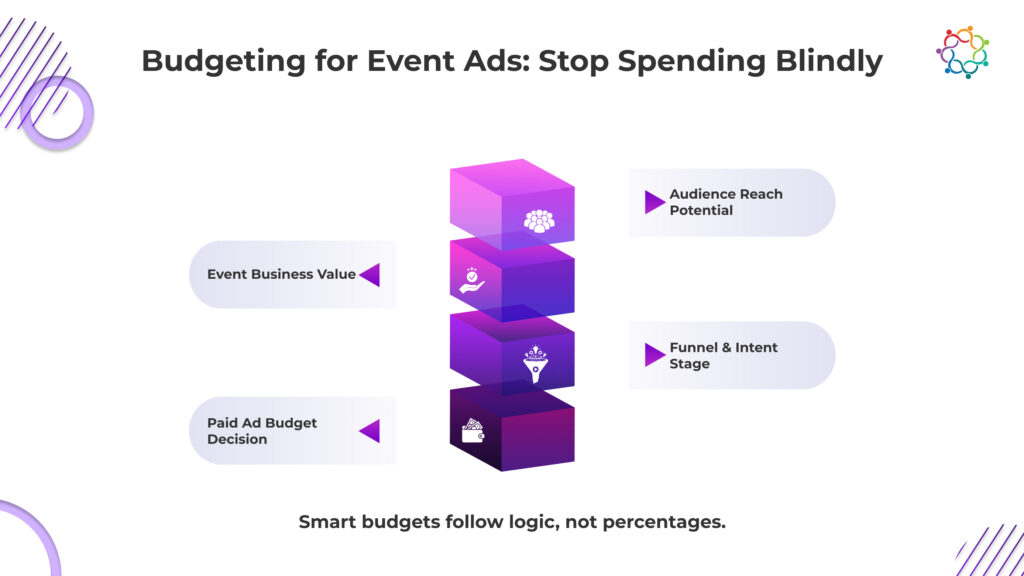

Event ad budgets often emerge from habit rather than strategy. A fixed percentage of the event budget is allocated to promotion, regardless of audience size, funnel stage, or event value. This approach feels structured, but it is not strategic.

Effective budgeting starts with understanding what the event is meant to produce. A high-value executive roundtable with a narrow audience requires a different spend logic than a scalable product demo. Budget should reflect potential impact, not tradition.

Several factors should shape spending decisions. Audience size determines how quickly saturation occurs. The funnel stage influences how much education and reinforcement are needed. Timing matters as well. Early testing helps validate messaging and targeting, while late-stage pushes focus on conversion.

Instead of formulas, teams need guardrails. Define a maximum acceptable cost per qualified attendee. Decide in advance what signals justify scaling spend and what signals trigger a pause. This turns budgeting into a learning system rather than a gamble.
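A guardrail of this kind can be written down before launch as a small decision rule. The sketch below is one possible shape, under stated assumptions: the function name `spend_decision`, the `scale_below` threshold (70% of the ceiling), and the three outcomes are hypothetical choices, not a standard.

```python
def spend_decision(
    spend: float,
    qualified_attendees: int,
    max_cost_per_qualified: float,
    scale_below: float = 0.7,
) -> str:
    """Return 'scale', 'hold', or 'pause' for a campaign.

    max_cost_per_qualified is the ceiling agreed before launch.
    scale_below is an assumed fraction of that ceiling; at or under it,
    scaling spend is treated as justified.
    """
    if qualified_attendees == 0:
        # No qualified attendee yet: pause once spend alone has reached the ceiling.
        return "pause" if spend >= max_cost_per_qualified else "hold"

    cost = spend / qualified_attendees
    if cost > max_cost_per_qualified:
        return "pause"
    if cost <= scale_below * max_cost_per_qualified:
        return "scale"
    return "hold"


# Hypothetical campaign: 1,000 spent, 10 qualified attendees, ceiling of 150 each.
print(spend_decision(1_000, 10, 150))
```

The value of writing the rule down is that the thresholds are fixed before results arrive, so a disappointing campaign cannot quietly redefine what counts as acceptable.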

One disciplined approach is to separate the budget into two phases. An exploratory phase tests assumptions at low spend. A commitment phase scales only what proves capable of driving quality attendance. This structure protects ROI and forces accountability.
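The two phases can be sketched as a budget split followed by a filter. This is a minimal illustration with assumed details: the 20% exploratory share, the variant names, and the rule of dividing the commitment budget in proportion to qualified attendees per unit of spend are all hypothetical.

```python
def plan_phases(total_budget: float, explore_share: float = 0.2) -> tuple[float, float]:
    """Split the budget into (exploratory, commitment) amounts."""
    explore = total_budget * explore_share
    return explore, total_budget - explore


def commit_allocation(
    commit_budget: float,
    test_results: dict[str, tuple[float, int]],
    max_cost_per_qualified: float,
) -> dict[str, float]:
    """Divide the commitment budget among variants that passed the test phase.

    test_results maps a variant name to (spend, qualified_attendees).
    Variants above the cost ceiling, or with no qualified attendees, get nothing.
    """
    efficiency = {}
    for name, (spend, qualified) in test_results.items():
        if spend > 0 and qualified > 0 and spend / qualified <= max_cost_per_qualified:
            efficiency[name] = qualified / spend  # qualified attendees per unit spend
    total = sum(efficiency.values())
    return {name: commit_budget * eff / total for name, eff in efficiency.items()}


# Hypothetical test-phase results for two targeting variants.
explore, commit = plan_phases(10_000)
results = {"past_attendees": (1_000, 10), "broad_interest": (1_000, 4)}
print(commit_allocation(commit, results, max_cost_per_qualified=150))
```

If no variant passes, the function returns an empty allocation, which matches the intent of the structure: the commitment budget is not spent just because it was set aside.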

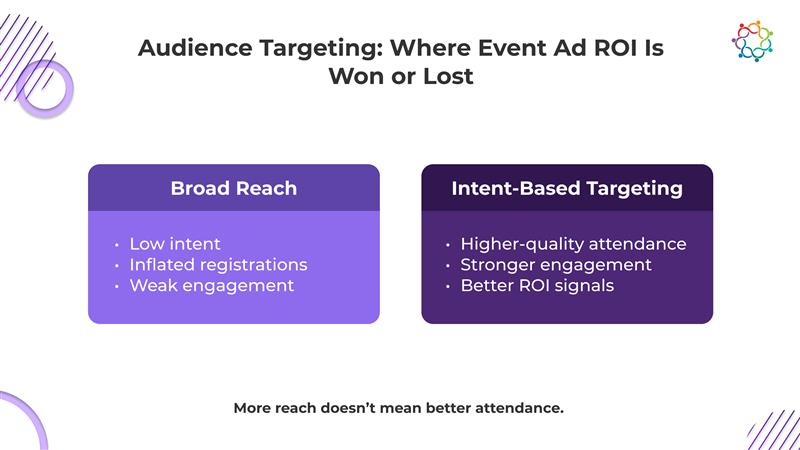

Before any discussion of platforms, budgets, or creative, audience targeting sets the ceiling for event ad ROI. Every downstream metric is shaped by who you choose to reach in the first place. When targeting is loose, paid spend buys attention without relevance. When it is precise, even modest budgets can drive high-quality attendance.

This section breaks down how targeting decisions create or destroy value, starting with the most common mistake teams make: prioritizing reach over intent.

Reach is seductive because it looks like momentum. Large audiences create the illusion of scale, but they also dilute relevance. Broad targeting inflates costs and attracts attendees with little connection to the event’s purpose.

Intent-based targeting shifts the focus from who could attend to who should attend. Signals such as prior engagement, relevant search behavior, or content consumption indicate readiness. These signals may reduce audience size, but they increase attendance quality.

In 2026, targeting decisions matter more than creative polish. Narrowing reach feels risky, yet it aligns spending with outcomes. When ads speak to an existing problem or active need, they function as confirmation rather than persuasion.

The trade-off is clear. Intent targeting limits volume but improves downstream metrics. Reach-based targeting boosts registrations while weakening ROI. Mature teams choose the former and accept lower top-line numbers in exchange for meaningful results.

First-party data remains one of the most underutilized advantages in event promotion, even as teams invest heavily in paid channels. CRM records, past attendee lists, account engagement history, and product usage signals provide a level of context that no platform-built audience can replicate. These data sources reflect real interactions, real intent, and real relationships, which makes them far more predictive of attendance quality than inferred interests.

Platform audiences and lookalikes still play an important role, but only when they are used with discipline. Left unchecked, they optimize for surface-level similarity rather than relevance. This is where many campaigns lose focus. Effective targeting anchors expansion to first-party data, using it as a benchmark rather than a replacement.