The problem is not effort. It is intent. When evaluation is treated as a task to complete rather than a system that informs future choices, its impact is limited. Many post-event reports are archived, mentioned once in a quarterly review, and never opened again. They summarize what happened, but they say little about what changed or what should be done differently going forward.

In 2026, enterprise environments are less forgiving. Budgets are scrutinized, leadership expects clarity, and scale amplifies the cost of weak decisions. Treating post-event evaluation as documentation rather than decision infrastructure is no longer sustainable. Evaluation must move beyond comfort metrics and toward signals that explain behavior, outcomes, and trade-offs. Without that shift, teams will continue to optimize execution while learning very little.

Feedback and evaluation are often used interchangeably, but they serve fundamentally different purposes. Feedback captures how people felt. Evaluation explains what happened, why it happened, and what should change as a result. Confusing the two leads to shallow conclusions and misplaced confidence.

Feedback is subjective by design. It is voluntary, influenced by recency and emotion, and skewed toward extremes. It can highlight obvious issues or confirm basic satisfaction, but it cannot reliably explain performance. Evaluation, by contrast, is a structured discipline that combines multiple signals, contextual understanding, and explicit intent to inform decisions.

At enterprise scale, this distinction matters. Leaders do not need reassurance that an event was enjoyable. They need evidence to guide investment, prioritization, and strategy. Post-event evaluation becomes valuable only when it integrates opinion with behavior, outcomes, and comparison.

A useful way to frame the difference is simple:

- Feedback answers how attendees reacted.
- Evaluation answers what the organization learned.

When teams rely solely on feedback, they mistake sentiment for signal. A disciplined evaluation approach acknowledges the limits of opinion and deliberately incorporates event performance measurement, engagement patterns, and downstream impact. This is not about abandoning surveys. It is about placing them in their proper, limited role within a broader system.

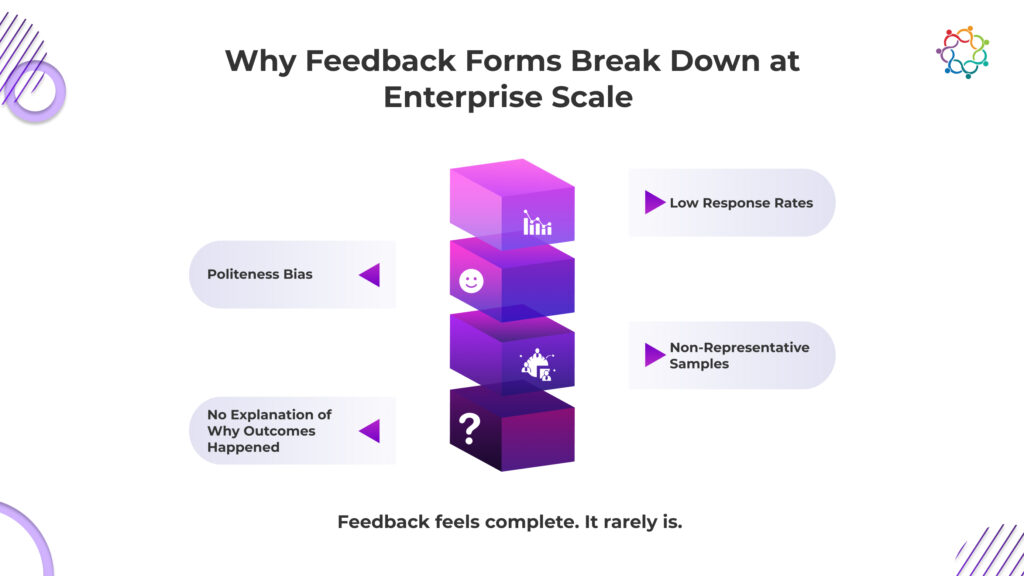

Feedback mechanisms degrade as scale increases. What works for a single workshop or small event becomes unreliable across dozens of events, regions, and audiences. The failure is structural, not tactical.

Response rates decline as audiences grow and survey fatigue increases. Those who respond are rarely representative of the full attendee base. Politeness bias further distorts results, especially in B2B contexts where relationships matter. Attendees often provide positive ratings to be courteous, not because the experience delivered value.

More importantly, feedback forms cannot explain causality. They can indicate that satisfaction was high or low, but they cannot reveal why certain outcomes occurred. They do not connect engagement depth to business results. They do not account for differences in audience intent, event objectives, or market context.

When enterprise teams try to measure event ROI with survey averages, they run into false precision. A score of 4.3 out of 5 feels definitive but provides no guidance on what to adjust. This creates a dangerous loop where teams repeat formats that feel successful while ignoring the underperformance hidden beneath positive sentiment.
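To make the false-precision point concrete, here is a minimal sketch using made-up ratings. Both hypothetical events average 4.3 out of 5, yet one has a small group of clearly unhappy attendees that the average hides. The rating lists and the `summarize` helper are illustrative assumptions, not data from any real event.

```python
from collections import Counter
from statistics import mean

# Hypothetical 1-5 survey ratings for two events (illustrative values only).
event_a = [4, 4, 4, 5, 4, 5, 4, 4, 5, 4]  # uniformly "good"
event_b = [5, 5, 5, 5, 5, 5, 5, 5, 2, 1]  # mostly delighted, a few badly let down


def summarize(ratings):
    """Return the mean rating together with the full 1-5 distribution."""
    counts = Counter(ratings)
    return {
        "mean": round(mean(ratings), 2),
        "distribution": {score: counts.get(score, 0) for score in range(1, 6)},
    }


summary_a = summarize(event_a)
summary_b = summarize(event_b)

print(summary_a)
print(summary_b)
```

The two means are identical, but only the distribution shows that the second event left some attendees with a poor experience. Even then, the distribution says who was unhappy, not why.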

At scale, reliance on feedback alone produces noise, not insight. Recognizing this breakdown is the first step toward a more resilient post-event evaluation model that values explanation over reassurance.

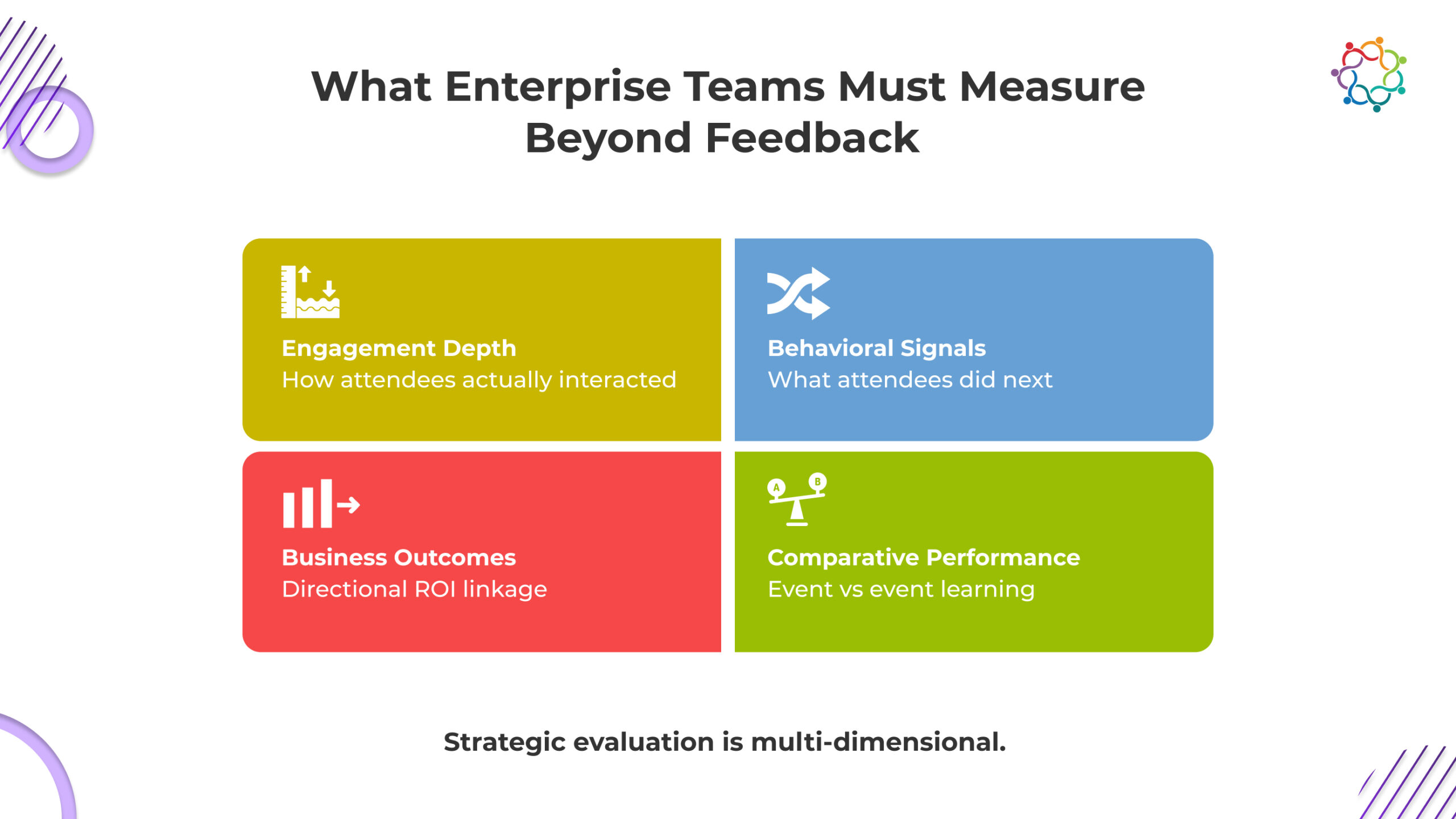

Before diving into specific metrics, it is important to reset how measurement is approached. Moving beyond feedback does not mean adding more numbers or building heavier dashboards. It means identifying signals that explain value. Enterprise teams must measure what reflects attention, intent, and impact across the event lifecycle. These signals should reveal how audiences actually behaved, how that behavior connected to business outcomes, and how performance compares across events.

This section outlines the core dimensions that matter once evaluation shifts from opinion collection to decision support. Each dimension answers a different strategic question, and together they form a complete evaluation system.

Attendance is an entry condition, not a value indicator. Enterprise teams that equate presence with success miss the most important signal: how deeply participants engaged. Engagement depth reveals whether an event held attention, delivered relevance, and justified the time investment.

Depth can be observed through patterns rather than opinions. Session drop-offs show where interest declined. Interaction frequency highlights moments of curiosity or confusion. Time spent indicates whether the content sustained attention beyond obligation.

One effective way to approach this is to look for gradients, not totals:

- Compare early versus late session participation.
- Identify which segments stayed engaged longest.
- Examine interaction intensity across formats.

Engagement depth predicts value because it reflects voluntary behavior. People disengage quietly when content does not resonate. Measuring this behavior provides a more honest assessment than satisfaction scores.
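The "gradients, not totals" idea can be sketched in a few lines. The example below assumes you have per-session headcounts for each audience segment in agenda order; the segment names, numbers, and the `retention_gradient` helper are hypothetical. It compares average attendance in the second half of the agenda with the first half.

```python
def retention_gradient(headcounts):
    """Ratio of late-half to early-half average attendance (1.0 = no drop-off)."""
    if len(headcounts) < 2:
        raise ValueError("need at least two sessions to compare early vs late")
    half = len(headcounts) // 2
    early, late = headcounts[:half], headcounts[half:]
    return (sum(late) / len(late)) / (sum(early) / len(early))


# Hypothetical headcounts per session, in agenda order (illustrative values).
sessions_by_segment = {
    "enterprise": [120, 118, 110, 105, 100, 96],
    "mid_market": [200, 150, 110, 80, 60, 40],
}

totals = {seg: sum(counts) for seg, counts in sessions_by_segment.items()}
gradients = {
    seg: round(retention_gradient(counts), 2)
    for seg, counts in sessions_by_segment.items()
}

print(totals)     # similar overall session attendance
print(gradients)  # very different retention
```

In this made-up data the two segments have nearly the same total attendance, but one retains most of its audience while the other loses well over half by the later sessions. A total would have hidden that difference.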

When engagement metrics are treated as diagnostic signals rather than vanity numbers, they help teams refine agendas, formats, and pacing. Over time, these patterns form a baseline for post-event evaluation that focuses on how value was actually consumed.

Behavior is a stronger indicator of intent than stated preference. What attendees do during and after an event reveals what mattered to them. Behavioral signals cut through bias because they require effort.

During events, signals include content downloads, questions asked, polls answered, and meetings requested. After events, follow-up actions such as resource access, demo requests, or continued conversations provide further evidence of impact.

These behaviors should be interpreted collectively, not in isolation. A single download may mean little. A cluster of related actions suggests momentum. Event feedback analysis becomes more credible when anchored in observed behavior.
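One simple way to read behaviors collectively is to count how many distinct kinds of action each attendee took, rather than counting raw events. The sketch below is an assumption-laden illustration: the action log, the action names, and the threshold of three distinct actions are all hypothetical and would need tuning against real data.

```python
from collections import defaultdict

# Hypothetical action log: (attendee_id, action_type). Values are illustrative.
actions = [
    ("a1", "download"),
    ("a2", "download"), ("a2", "question"), ("a2", "meeting_request"),
    ("a3", "poll"), ("a3", "poll"),
    ("a4", "download"), ("a4", "demo_request"), ("a4", "resource_access"),
]

MIN_DISTINCT_ACTIONS = 3  # assumed threshold, not a recommended standard


def momentum_attendees(action_log, min_distinct=MIN_DISTINCT_ACTIONS):
    """Return attendees who took at least `min_distinct` different kinds of action."""
    distinct = defaultdict(set)
    for attendee, action in action_log:
        distinct[attendee].add(action)
    return sorted(a for a, kinds in distinct.items() if len(kinds) >= min_distinct)


flagged = momentum_attendees(actions)
print(flagged)
```

Counting distinct action types means an attendee who answers the same poll twice is not mistaken for one who downloaded content, asked a question, and requested a meeting.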

Behavioral data also bridges the gap between marketing and sales. It shows where interest translated into action without forcing strict attribution. This allows teams to discuss event performance in terms of influence rather than credit.

Incorporating behavioral signals elevates post-event evaluation from retrospective commentary to forward-looking insight. It highlights which experiences moved audiences from passive consumption to active engagement.

Enterprise leaders ultimately care about outcomes, but simplistic attribution models often obscure more than they reveal. The goal is not to prove that an event caused a deal, but to understand how it influenced movement within the business system.

Outcome linkage should focus on direction and proximity. Did targeted accounts progress after engagement? Did sales cycles shorten? Did pipeline velocity change for attendees compared to non-attendees? These questions support event ROI measurement without overpromising precision.

Avoiding attribution jargon helps maintain credibility. Instead of claiming direct revenue impact, frame insights around influence and acceleration. This aligns evaluation with how complex buying decisions actually unfold.

A practical approach includes:

- Comparing the pipeline behavior of engaged versus unengaged accounts
- Tracking deal stage movement post-event
- Observing changes in sales conversations initiated

These linkages transform post-event evaluation into a strategic lens rather than a defensive report. They inform where events contribute meaningfully within a broader go-to-market strategy.
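As a rough sketch of the engaged-versus-unengaged comparison, the example below computes the share of accounts whose deal stage advanced after an event, split by whether the account engaged. The account records, field names, and stage numbering are hypothetical. The result describes direction and proximity; it does not establish that the event caused the movement.

```python
# Hypothetical account records. Stage numbers increase as a deal progresses.
accounts = [
    {"name": "acct-1", "engaged": True, "stage_before": 1, "stage_after": 3},
    {"name": "acct-2", "engaged": True, "stage_before": 2, "stage_after": 3},
    {"name": "acct-3", "engaged": True, "stage_before": 2, "stage_after": 2},
    {"name": "acct-4", "engaged": True, "stage_before": 1, "stage_after": 2},
    {"name": "acct-5", "engaged": False, "stage_before": 1, "stage_after": 1},
    {"name": "acct-6", "engaged": False, "stage_before": 2, "stage_after": 3},
    {"name": "acct-7", "engaged": False, "stage_before": 3, "stage_after": 3},
    {"name": "acct-8", "engaged": False, "stage_before": 1, "stage_after": 1},
]


def progression_rate(records):
    """Share of accounts whose deal stage advanced after the event."""
    if not records:
        return 0.0
    advanced = sum(1 for r in records if r["stage_after"] > r["stage_before"])
    return advanced / len(records)


engaged_rate = progression_rate([r for r in accounts if r["engaged"]])
unengaged_rate = progression_rate([r for r in accounts if not r["engaged"]])

print(f"engaged: {engaged_rate:.0%}, unengaged: {unengaged_rate:.0%}")
```

With real data, the groups would also need to be comparable in size, segment, and starting stage before the gap between the two rates is worth discussing.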

Evaluation without comparison is guesswork. Single-event analysis lacks context. Enterprise teams operate portfolios, not isolated experiences, and evaluation must reflect that reality.

Comparative analysis answers questions that standalone metrics cannot. Which formats outperform others for specific audiences? Which regions show stronger engagement depth? Which objectives consistently underdeliver?

Comparison should be intentional and scoped. Event versus event, format versus format, and audience versus audience comparisons reveal patterns over time. This supports measuring event success as a relative, evolving standard rather than a static benchmark.

One structured comparison per dimension is sufficient. Overloading analysis creates confusion. The objective is to surface differences that warrant decision-making, not to rank everything.

By embedding comparison into post-event evaluation, teams shift from anecdotal learning to portfolio intelligence. This is where evaluation begins to shape strategy rather than validate execution.

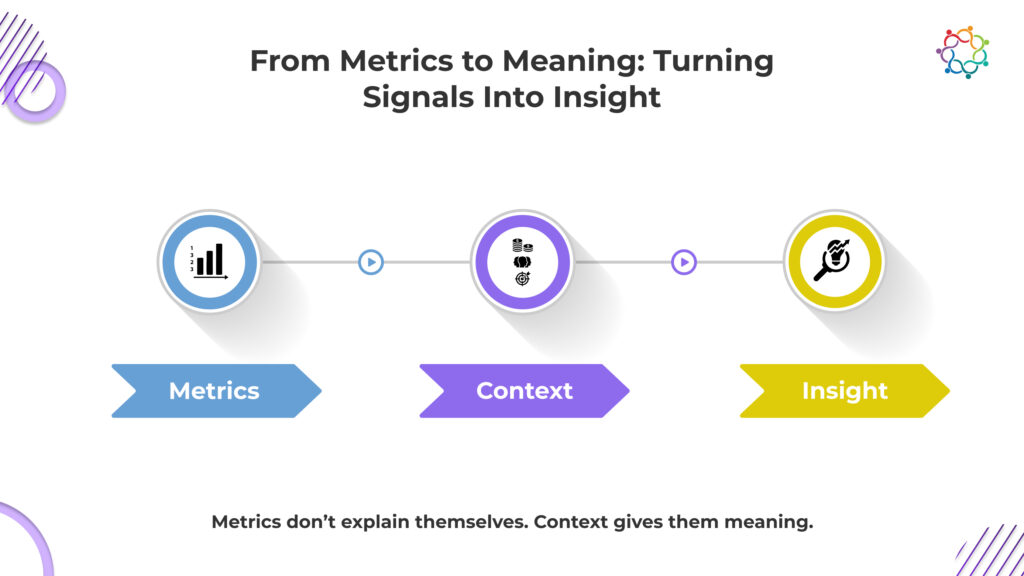

Collecting metrics is easier than interpreting them. Raw data does not explain itself, and without context, even accurate metrics can mislead. Insight emerges only when signals are framed against intent, cost, and constraints.

Contextual interpretation requires asking disciplined questions. What was the objective of this event? Who was the audience? What trade-offs were made in design and spend? Without these anchors, event reporting devolves into dashboards that look impressive but guide nothing.

Meaning is created through synthesis. Engagement depth gains significance when compared to audience expectations. Behavioral signals matter more when aligned with account strategy. Outcome linkage is useful only when viewed alongside investment levels.

Teams should focus on translating observations into implications. An observation states what happened. An implication explains what should change. This step is often skipped, leaving insights trapped in analysis.

Post-event evaluation becomes valuable when it trains teams to think, not just to measure. Metrics are inputs. Insight is the output that influences future decisions.

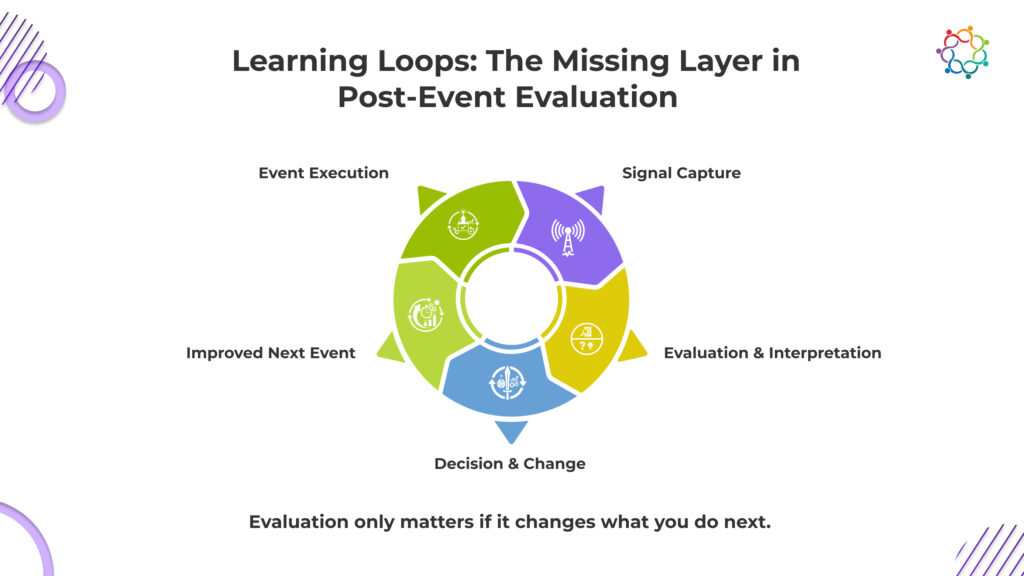

Most insights die in decks because there is no mechanism to carry them forward. Learning loops close that gap. A learning loop connects evaluation to action and then back to evaluation again.

A true learning loop has three properties. It captures insight, assigns ownership, and influences a future decision. Without all three, learning remains theoretical.

Learning loops should explicitly shape:

- Targeting choices
- Messaging emphasis
- Format and experience design
- Budget allocation across events

This is where post-event evaluation becomes strategic infrastructure. It ensures that each event contributes to cumulative understanding rather than isolated reporting.

Learning loops also enable continuous improvement. They create memory across teams and time. Instead of repeating assumptions, teams test and refine them. This aligns evaluation with the pace and complexity of enterprise operations.

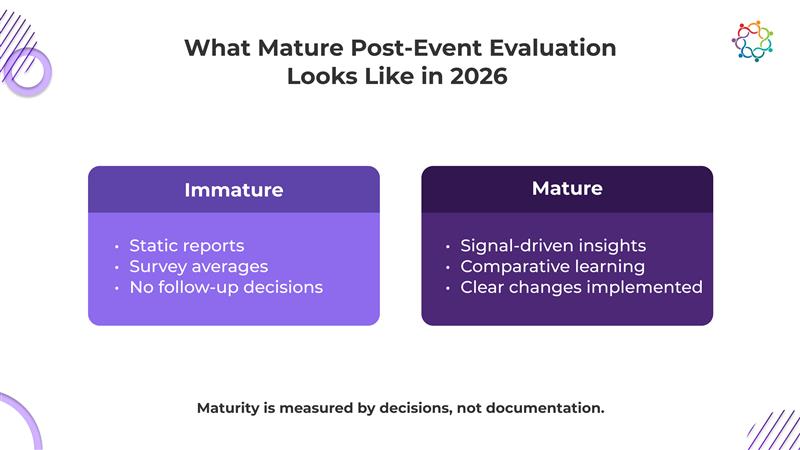

Maturity is not about more data. It is about better judgment. Mature evaluation systems prioritize clarity, relevance, and accountability.

In 2026, advanced teams exhibit consistent traits. They track fewer metrics but understand them deeply. They assign clear ownership for insights and decisions. They view evaluation as a longitudinal process rather than a per-event task.

Characteristics of maturity include:

These teams treat evaluation as a capability, not a report. They invest in thinking as much as in measurement. Post-event evaluation becomes a shared language for learning and improvement across the organization.

Evaluation that does not influence decisions is documentation, not learning. Many enterprise teams invest significant time collecting data after events, yet very little of it shapes what happens next. Activity is reviewed, numbers are acknowledged, and reports are filed away. The appearance of rigor replaces actual improvement. In a climate of tighter budgets and higher executive scrutiny, this gap is no longer harmless. Every evaluation effort must justify itself by improving future choices, not by proving work was done.

This requires a fundamental shift in how evaluation is valued. Surveys and feedback forms still matter, but only within clear limits. They capture sentiment at a moment in time and from a narrow slice of the audience. On their own, they cannot explain behavior, intent, or business impact. Insight comes from observing what people actually do, comparing performance across contexts, and linking engagement to outcomes within the realities of cost, audience, and objectives.

When post-event evaluation is treated as strategic infrastructure, it stops being a closing ritual. It becomes a forward-looking system that informs targeting, experience design, and investment decisions. Evaluation earns its place only when it changes future action. If it does not alter priorities, formats, or resource allocation, it should be questioned, simplified, or replaced.
